import numpy as np

import pandas as pd

import scipy as scp

import matplotlib.pyplot as plt

import math

import matplotlib.cm as cmx

from mssm.models import *

from mssm.src.python.compare import compare_CDL

from mssmViz.plot import *

from mssmViz.sim import *

from mssmViz.extract import *

import matplotlib.style as mplstyle

mplstyle.use('fast')

np.set_printoptions(suppress=True)

nc = 10

colors = [cmx.tab20(x) for x in np.linspace(0.0,min(0.1*nc,1.0),nc)]

single_size = 8/2.54

mssm Tutorial

With mssm we can estimate a broad variety of smooth models as they are also supported in mgcv (Wood, 2017). Terms that are available in both mgcv and mssm include regular univariate smooth terms, binary smooth terms, Tensor smooth interactions, by-factor smooth terms (ordered and not ordered), and random non-linear smooth terms. Like mgcv, mssm also supports random effects (random intercepts, random slopes, and as mentioned random smooths). By default a B-spline basis is used by mssm for all smooth terms and penalties are either difference (Eilers & Marx, 2010) or identity penalties (Kernel penalties, Marra & Wood (2012) are also supported).

Like mgcv, mssm supports estimation of conventional Generalized additive (mixed) models. Currently, Binomial models (N >= 1), Gamma models, Inverse Gaussian, Poisson, and Gaussian models are supported! mssm uses sparse matrices and is thus particularly well equipped to handle models of multi-level data, including many random effects! mssm also supports estimation of GAMMs of location, scale, and shape parameters (“GAMMLSS”; see Rigby & Stasinopoulos, 2005) and even more general smooth models (“GSMMs”) as defined by Wood, Pya, & Säfken (2016).

mssm automatically figures out the right amount of penalization for all terms and all these models using a combination of the approaches discussed by Wood, Pya, & Säfken (2016), Wood & Fasiolo (2017), Wood, Shaddick, & Augustin, (2017). Alternatively, the L-qEFS update by Krause et al. (submitted) can be used to select the regularization/penalization parameters. This update is particularly attrative when working with general smooth models, since it only requires researchers to code up the log-likelihood and (optionally) ways to compute its gradient in order to estimate and regularize the model. Krause et al. (submitted) provides a detailed description of the different algorithms implemented in the mssm toolbox.

In contrast, this tutorial focuses on practical application of the mssm toolbox. It contains:

a brief discussion of the structure your data should have

an introduction to the

Formulaclass, including a conversion table if you are familiar withmgcvsyntax and just want to get startedan extensive section of examples involving Gaussian additive models so that you can get familiar with the

Formulaclass and the different terms supported bymssmexamples on how to estimate Generalized additive models and even more general models (i.e., GAMMLSS and GSMMs)

a section on advanced topics, focused on model selection, posterior sampling, and working with the

GSMMclass

Along the way, this tutorial introduces the plot functions available in mssmViz, which can be used to visualize model predictions and to asses model validity via residual plots for all models estimated by mssm. This tutorial assumes that you are already familiar with the idea behind GAMMS (and perhaps even GAMMLSS models; Rigby & Stasinopoulos, 2005) and does not aim to provivde a thorough introduction to their theory. Instead, the aim is to quickly show you how to fit, inspect, validate, and select between these models with mssm. If you want to learn more about the theory behind GAMMS (and GAMMLSS models), head to the reference section at the end of the tutorial, which contains pointers to papers and books focused on GAMM & GAMMLSS models.

Data structure

# Import some simulated data

dat = pd.read_csv('https://raw.githubusercontent.com/JoKra1/mssmViz/main/data/GAMM/sim_dat.csv')

# mssm requires that the data-type for variables used as factors is 'O'=object

dat = dat.astype({'series': 'O',

'cond':'O',

'sub':'O',

'series':'O'})

Some remarks about the desired layout of the data to be usable with the GAMM class:

Data has to be provided in a

pandas.DataFrame.The dependent variable can contain NAs, which will be taken care of. No NAs should be present in any of the predictor columns!

The data-type (dtype) for numeric columns should be float or int. Categorical predictors need to have the object datatype (see code above).

No specific row/column sorting is required.

Exception to the last point:

If you want to approximate the computations for random smooth models of multi-level time-series data or want to include an “ar1” model of the residuals (see section on advanced topics), the data should be ordered according to series, then time: the ordered (increasing) time-series corresponding to the first series should make up the first X rows. The ordered time-series corresponding to the next series should make up the next Y rows, and so on. More specifically, what matters is that individual series are making up a time-ordered sub-block of dat. So you could also have a dataframe that starts with a series labeled 0, then a series labeled 115, then a series labeled X. As long as any series in the data is not interrupted by rows corresponding to any other series it will be fine.

# Take a look at the data:

dat.head()

| y | time | series | cond | sub | x | |

|---|---|---|---|---|---|---|

| 0 | 7.005558 | 0 | 0 | a | 0 | 9.817736 |

| 1 | 11.122316 | 20 | 0 | a | 0 | 10.262371 |

| 2 | 4.766720 | 40 | 0 | a | 0 | 10.445887 |

| 3 | 2.952046 | 60 | 0 | a | 0 | 8.481554 |

| 4 | 7.463034 | 80 | 0 | a | 0 | 10.180660 |

# To make sure the last point raised above is met - we can call the following.

# This does not hurt, even if we do not want to rely on approximate computations or don't need an "ar1" model.

dat = dat.sort_values(['series', 'time'], ascending=[True, True])

The Formula class

At the core of each model - independent of whether you want to work with GAMMs, GAMMLSS, or even GSMM - is the Formula. A Formula is used to specify the additive model of a particular parameter of the likelihood/response family of the model. With GAMMs we model a single parameter: the mean of the independent response variables. Thus we need to specify only a single Formula. With GAMMLSS and GSMM, we can model multiple parameters of the response variables/likelihood, and thus we will often have to specify multiple Formulas.

Like most classes and functions implemented in mssm, the Formula class has been extensively documented, including a list of code examples. Thus, to learn more about the Formula class you can rely on the help function as follows:

help(Formula)

Help on class Formula in module mssm.src.python.formula:

class Formula(builtins.object)

| Formula(

| lhs: lhs,

| terms: list[GammTerm],

| data: pd.DataFrame,

| series_id: str | None = None,

| codebook: dict | None = None,

| print_warn: bool = True,

| keep_cov: bool = False,

| find_nested: bool = True,

| file_paths: list[str] = [],

| file_loading_nc: int = 1,

| file_loading_kwargs: dict = {'header': 0, 'index_col': False}

| ) -> None

|

| The formula of a regression equation.

|

| **Note:** The class implements multiple ``get_*`` functions to access attributes stored in

| instance variables. The get functions always return a copy of the instance variable and the

| results are thus safe to manipulate.

|

| Examples::

|

| from mssm.models import *

| from mssmViz.sim import *

|

| from mssm.src.python.formula import build_penalties,build_model_matrix

|

| # Get some data and formula

| Binomdat = sim3(10000,0.1,family=Binomial(),seed=20)

| formula = Formula(lhs("y"),[i(),f(["x0"]),f(["x1"]),f(["x2"]),f(["x3"])],data=Binomdat)

|

| # Now with a tensor smooth

| formula = Formula(lhs("y"),[i(),f(["x0","x1"],te=True),f(["x2"]),f(["x3"])],data=Binomdat)

|

| # Now with a tensor smooth anova style

| formula = Formula(lhs("y"),[i(),f(["x0"]),f(["x1"]),f(["x0","x1"]),f(["x2"]),f(["x3"])],

| data=Binomdat)

|

|

| ######## Stream data from file and set up custom codebook #########

|

| file_paths = [

| f'https://raw.githubusercontent.com/JoKra1/mssmViz/main/data/GAMM/sim_dat_cond_{cond}.csv' for cond in ["a","b"]

| ]

|

| # Set up specific coding for factor 'cond'

| codebook = {'cond':{'a': 0, 'b': 1}}

|

| formula = Formula(lhs=lhs("y"), # The dependent variable - here y!

| terms=[i(), # The intercept, a

| l(["cond"]), # For cond='b'

| # to-way interaction between time and cond;

| # one smooth over time per cond level

| f(["time"],by="cond"),

| # to-way interaction between x and cond;

| # one smooth over x per cond level

| f(["x"],by="cond"),

| # three-way interaction

| f(["time","x"],by="cond"),

| # Random non-linear effect of time - one

| # smooth per level of factor sub

| fs(["time"],rf="sub")],

| data=None, # No data frame!

| file_paths=file_paths, # Just a list with paths to files.

| print_warn=False,

| codebook=codebook)

|

| # Alternative:

| formula = Formula(lhs=lhs("y"),

| terms=[i(),

| l(["cond"]),

| f(["time"],by="cond"),

| f(["x"],by="cond"),

| f(["time","x"],by="cond"),

| fs(["time"],rf="sub")],

| data=None,

| file_paths=file_paths,

| print_warn=False,

| keep_cov=True, # Keep encoded data structure in memory

| codebook=codebook)

|

| # preparing for ar1 model (with resets per time-series) and data type requirements

|

| dat = pd.read_csv(

| 'https://raw.githubusercontent.com/JoKra1/mssmViz/main/data/GAMM/sim_dat.csv'

| )

|

| # mssm requires that the data-type for variables used as factors is 'O'=object

| dat = dat.astype({'series': 'O',

| 'cond':'O',

| 'sub':'O',

| 'series':'O'})

|

| formula = Formula(lhs=lhs("y"),

| terms=[i(),

| l(["cond"]),

| f(["time"],by="cond"),

| f(["x"],by="cond"),

| f(["time","x"],by="cond")],

| data=dat,

| print_warn=False,

| series_id='series') # 'series' variable identifies individual time-series

|

| :param lhs: The lhs object defining the dependent variable.

| :type variable: lhs

| :param terms: A list of the terms which should be added to the model.

| See :py:mod:`mssm.src.python.terms` for info on which terms can be added.

| :type terms: [GammTerm]

| :param data: A pandas dataframe (with header!) of the data which should be used to estimate the

| model. The variable specified for ``lhs`` as well as all variables included for a ``term``

| in ``terms`` need to be present in the data, otherwise the call to Formula will throw an

| error.

| :type data: pd.DataFrame or None

| :param series_id: A string identifying the individual experimental units. Usually a unique

| trial identifier. Only necessary if approximate derivative computations are to be utilized

| for random smooth terms or if you need to estimate an 'ar1' model for multiple time-series

| data.

| :type series_id: str, optional

| :param codebook: Codebook - keys should correspond to factor variable names specified in terms.

| Values should again be a ``dict``, with keys for each of K levels of the factor and value

| corresponding to an integer in {0,K}.

| :type codebook: dict or None

| :param print_warn: Whether warnings should be printed. Useful when fitting models from terminal.

| Defaults to True.

| :type print_warn: bool,optional

| :param keep_cov: Whether or not the internal encoding structure of all predictor variables

| should be created when forming :math:`\mathbf{X}^T\mathbf{X}` iteratively instead of

| forming :math:`\mathbf{X}` directly. Can speed up estimation but increases memory

| footprint. Defaults to True.

| :type keep_cov: bool,optional

| :param find_nested: Whether or not to check for nested smooth terms. This only has an effect if

| you include at least one smooth term with more than two variables. Additionally, this check

| is often not necessary if you correctly use the ``te`` key-word of smooth terms and ensure

| that the marginals used to construct ti smooth terms have far fewer basis functions than

| the "main effect" univariate smooths. Thus, if you know what you're doing and you're

| working with large models, you might want to disable this (i.e., set to False) because this

| check can get quite expensive for larger models. Defaults to True.

| :type find_nested: bool,optional

| :param file_paths: A list of paths to .csv files from which :math:`\mathbf{X}^T\mathbf{X}`

| and :math:`\mathbf{X}^T\mathbf{y}` should be created iteratively. Setting this to a

| non-empty list will prevent fitting X as a whole. ``data`` should then be set to ``None``.

| Defaults to an empty list.

| :type file_paths: [str],optional

| :param file_loading_nc: How many cores to use to a) accumulate :math:`\mathbf{X}` in parallel

| (if ``data`` is not ``None`` and ``file_paths`` is an empty list) or b) to accumulate

| :math:`\mathbf{X}^T\mathbf{X}` and :math:`\mathbf{X}^T\mathbf{y}`

| (and :math:`\mathbf{\eta}` during estimation) (if ``data`` is ``None`` and ``file_paths``

| is a non-empty list). For case b, this should really be set to the maimum number of cores

| available. For a this only really speeds up accumulating :math:`\mathbf{X}` if

| :math:`\mathbf{X}` has many many columns and/or rows. Defaults to 1.

| :type file_loading_nc: int,optional

| :param file_loading_kwargs: Any key-word arguments to pass to pandas.read_csv when

| :math:`\mathbf{X}^T\mathbf{X}` and :math:`\mathbf{X}^T\mathbf{y}` should be created

| iteratively (if ``data`` is ``None`` and ``file_paths`` is a non-empty list).

| Defaults to ``{"header":0,"index_col":False}``.

| :type file_loading_kwargs: dict,optional

| :ivar lhs lhs: The left-hand side object of the regression formula passed to the constructor.

| Initialized at construction.

| :ivar [GammTerm] terms: The list of terms passed to the constructor. Initialized at

| construction.

| :ivar pd.DataFrame data: The dataframe passed to the constructor. Initialized at

| construction.

| :ivar [int] coef_per_term: A list containing the number of coefficients corresponding to each

| term included in ``terms``. Initialized at construction.

| :ivar [str] coef_names: A list containing a named identifier (e.g., "Intercept") for each

| coefficient estimated by the model. Initialized at construction.

| :ivar int n_coef: The number of coefficients estimated by the model in total. Initialized at

| construction.

| :ivar int unpenalized_coef: The number of un-penalized coefficients estimated by the model.

| Initialized at construction.

| :ivar np.ndarray or None y_flat: An array, containing all values on the dependent variable

| (i.e., specified by ``lhs.variable``) in order of the data-frame passed to ``data``. This

| variable will be initialized at construction but only if ``file_paths=None``, i.e., in case

| :math:`\mathbf{X}^T\mathbf{X}` and :math:`\mathbf{X}^T\mathbf{y}` are **not** created

| iteratively.

| :ivar np.ndarray or None cov_flat: An array, containing all (encoded, in case of categorical

| predictors) values on each predictor (each columns of ``cov_flat`` corresponds to a

| different predictor) variable included in any of the ``terms`` in order of the data-frame

| passed to ``data``. This variable will be initialized at construction but only if

| ``file_paths=None``, i.e., in case :math:`\mathbf{X}^T\mathbf{X}` and

| :math:`\mathbf{X}^T\mathbf{y}` are **not** created iteratively.

| :ivar np.ndarray or None NOT_NA_flat: An array, containing an indication (as bool) for each

| value on the dependent variable (i.e., specified by ``lhs.variable``) whether the

| corresponding value is not a number ("NA") or not. In order of the data-frame passed to

| ``data``. This variable will be initialized at construction but only if ``file_paths=None``,

| i.e., in case :math:`\mathbf{X}^T\mathbf{X}` and :math:`\mathbf{X}^T\mathbf{y}` are

| **not** created iteratively.

|

| Methods defined here:

|

| __init__(

| self,

| lhs: lhs,

| terms: list[GammTerm],

| data: pd.DataFrame,

| series_id: str | None = None,

| codebook: dict | None = None,

| print_warn: bool = True,

| keep_cov: bool = False,

| find_nested: bool = True,

| file_paths: list[str] = [],

| file_loading_nc: int = 1,

| file_loading_kwargs: dict = {'header': 0, 'index_col': False}

| ) -> None

| Initialize self. See help(type(self)) for accurate signature.

|

| encode_data(self, data: pd.DataFrame, prediction: bool = False) -> tuple[np.ndarray | None, np.ndarray, np.ndarray | None, list[np.ndarray] | None, list[np.ndarray] | None, list[np.ndarray] | None, np.ndarray | None]

| Encodes ``data``, which needs to be a ``pd.DataFrame`` and by default

| (if ``prediction==False``) builds an index of which rows in ``data`` are NA in the column

| of the dependent variable described by ``self.lhs``.

|

| :param data: The data to encode.

| :type data: pd.DataFrame

| :param prediction: Whether or not a NA index and a column for the dependent variable should

| be generated.

| :type prediction: bool, optional

| :return: A tuple with 7 (optional) entries: the dependent variable described by

| ``self.lhs``, the encoded predictor variables as a (N,k) array (number of rows matches

| the number of rows of the first entry returned, the number of columns matches the

| number of k variables present in the formula), an indication for each row whether

| the dependent variable described by ``self.lhs`` is NA, like the first entry but split

| into a list of lists by ``self.series_id``, like the second entry but split into a

| list of lists by ``self.series_id``, ike the third entry but split into a list of lists

| by ``self.series_id``, start and end points for the splits used to split the previous

| three elements (identifying the start and end point of every level of

| ``self.series_id``).

| :rtype: (np.ndarray|None, np.ndarray, np.ndarray|None, list[np.ndarray]|None,

| list[np.ndarray]|None, list[np.ndarray]|None, np.ndarray|None)

|

| get_coding_factors(self) -> dict

| Get a copy of the factor coding dictionary. Keys are factor variables in the data,

| values are dictionaries, where the keys correspond to the encoded levels (int) of the factor

| and the values to their levels (str).

|

| get_data(self) -> pd.DataFrame

| Get a copy of the ``data`` specified for this formula.

|

| get_depvar(self) -> np.ndarray

| Get a copy of the encoded dependent variable (defined via ``self.lhs``).

|

| get_factor_codings(self) -> dict

| Get a copy of the factor coding dictionary. Keys are factor variables in the data,

| values are dictionaries, where the keys correspond to the levels (str) of the factor and

| the values to their encoded levels (int).

|

| get_factor_levels(self) -> dict

| Get a copy of the factor levels dictionary. Keys are factor variables in the data,

| values are np.arrays holding the unique levels (as str) of the corresponding factor.

|

| get_has_intercept(self) -> bool

| Does this formula include an intercept or not.

|

| get_ir_smooth_term_idx(self) -> list[int]

| Get a copy of the list of indices that identify impulse response terms in

| ``self.terms``.

|

| get_lhs(self) -> lhs

| Get a copy of the ``lhs`` specified for this formula.

|

| get_linear_term_idx(self) -> list[int]

| Get a copy of the list of indices that identify linear terms in ``self.terms``.

|

| get_n_coef(self) -> int

| Get the number of coefficients that are implied by the formula.

|

| get_notNA(self) -> np.ndarray

| Get a copy of the encoded 'not a NA' vector for the dependent variable (defined via

| ``self.lhs``).

|

| get_random_term_idx(self) -> list[int]

| Get a copy of the list of indices that identify random terms in ``self.terms``.

|

| get_smooth_term_idx(self) -> list[int]

| Get a copy of the list of indices that identify smooth terms in ``self.terms``.

|

| get_subgroup_variables(self) -> list

| Returns a copy of sub-group variables for factor smooths.

|

| get_term_names(self) -> list[str]

| Returns a copy of the list with the names of the terms specified for this formula.

|

| get_terms(self) -> list[GammTerm]

| Get a copy of the ``terms`` specified for this formula.

|

| get_var_map(self) -> dict

| Get a copy of the var map dictionary. Keys are variables in the data, values their

| column index in the encoded predictor matrix returned by ``self.encode_data``.

|

| get_var_maxs(self) -> dict

| Get a copy of the var maxs dictionary. Keys are variables in the data, values are either

| the maximum value the variable takes on in ``self.data`` for continuous variables or

| ``None`` for categorical variables.

|

| get_var_mins(self) -> dict

| Get a copy of the var mins dictionary. Keys are variables in the data, values are either

| the minimum value the variable takes on in ``self.data`` for continuous variables or

| ``None`` for categorical variables.

|

| get_var_mins_maxs(self) -> tuple[dict, dict]

| Get a tuple containing copies of both the mins and maxs directory. See

| ``self.get_var_mins`` and ``self.get_var_maxs``.

|

| get_var_types(self) -> dict

| Get a copy of the var types dictionary. Keys are variables in the data, values are

| either ``VarType.NUMERIC`` for continuous variables or ``VarType.FACTOR`` for categorical

| variables.

|

| has_ir_terms(self) -> bool

| Does this formula include impulse response terms or not.

|

| ----------------------------------------------------------------------

| Data descriptors defined here:

|

| __dict__

| dictionary for instance variables

|

| __weakref__

| list of weak references to the object

Form this output, we learn that the Formula has three non-optional arguments: lhs, terms, and data. We also learn the expected types for all these arguments. lhs is another mssm class, used to specify the particular response variable associated with a Formula. You can again use the help function, to learn more:

help(lhs)

Help on class lhs in module mssm.src.python.formula:

class lhs(builtins.object)

| lhs(variable: str, f: Callable = None) -> None

|

| The Left-hand side of a regression equation.

|

| See the :class:`Formula` class for examples.

|

| :param variable: The name of the dependent/response variable in the dataframe passed to a

| :class:`Formula`. Can point to continuous and categorical variables. For

| :class:`mssm..models.GSMM` models, the variable can also be set to any placeholder

| variable in the data, since not every :class:`Formula` will be associated with a

| particular response variable.

| :type variable: str

| :param f: A function that will be applied to the ``variable`` before fitting. For example:

| np.log(). By default no function is applied to the ``variable``.

| :type f: Callable, optional

|

| Methods defined here:

|

| __init__(self, variable: str, f: Callable = None) -> None

| Initialize self. See help(type(self)) for accurate signature.

|

| ----------------------------------------------------------------------

| Data descriptors defined here:

|

| __dict__

| dictionary for instance variables

|

| __weakref__

| list of weak references to the object

Evidently, the lhs class can be used to specify the particular response variable we want to model (at least for GAMMs and GAMMLSS).

The next argument we have to specify is terms. The output from help(Formula) tells us that this is supposed to be a list of GammTerm objects. The GammTerm class is implemented by all terms available in mssm. mssm supports intercept terms i, linear terms l (see also li), smooth terms (f), impulse response smooth terms (irf), random smooth terms (fs), random intercepts (ri), and random slops (rs). You can again use the help function to learn about any of these:

help(f)

Help on class f in module mssm.src.python.terms:

class f(GammTerm)

| f(

| variables: list,

| by: str = None,

| by_cont: str = None,

| binary: tuple[str, str] | None = None,

| id: int | None = None,

| nk: int | list[int] = None,

| te: bool = False,

| rp: int = 0,

| constraint: ConstType = <ConstType.QR: 2>,

| identifiable: bool = True,

| scale_te: bool = False,

| basis: Callable = <function B_spline_basis at 0x7f9d5781f110>,

| basis_kwargs: dict = {},

| is_penalized: bool = True,

| penalize_null: bool = False,

| penalty: list[Penalty] | None = None,

| pen_kwargs: list[dict] | None = None

| ) -> None

|

| A univariate or tensor interaction smooth term. If ``variables`` only contains a

| single variable :math:`x`, this term will represent a univariate :math:`f(x)` in a model:

|

| .. math::

|

| \mu_i = a + f(x_i)

|

| For example, the model below in ``mgcv``:

|

| ::

|

| bam(y ~ s(x,k=10) + s(z,k=20))

|

| would be expressed as follows in ``mssm``:

|

| ::

|

| GAMM(Formula(lhs("y"),[i(),f(["x"],nk=9),f(["z"],nk=19)]),Gaussian())

|

| If ``variables`` contains two variables :math:`x` and :math:`z`, then this term will either

| represent the tensor interaction :math:`f(x,z)` in model:

|

| .. math::

|

| \mu_i = a + f(x_i) + f(z_i) + f(x_i,z_i)

|

| or in model:

|

| .. math::

|

| \mu_i = a + f(x_i,z_i)

|

| The first behavior is achieved by setting ``te=False``. In that case it is necessary

| to add 'main effect' ``f`` terms for :math:`x` and :math:`y`. In other words, the behavior then

| mimicks the ``ti()`` term available in ``mgcv`` (Wood, 2017). If ``te=True``, the term instead

| behaves like a ``te()`` term in ``mgcv``, so no separate smooth effects for the main effects

| need to be included.

|

| For example, the model below in ``mgcv``:

|

| ::

|

| bam(y ~ te(x,z,k=10))

|

| would be expressed as follows in ``mssm``:

|

| ::

|

| GAMM(Formula(lhs("y"),[i(),f(["x","z"],nk=9,te=True)]),Gaussian())

|

| In addition, the model below in ``mgcv``:

|

| ::

|

| bam(y ~ s(x,k=10) + s(z,k=20) + ti(x,z,k=10))

|

| would be expressed as follows in ``mssm``:

|

| ::

|

| GAMM(Formula(lhs("y"),[i(),f(["x"],nk=9),f(["z"],nk=19),f(["x","z"],nk=9,te=False)]),

| Gaussian())

|

| By default a B-spline basis is used with ``nk=9`` basis functions (after removing

| identifiability constrains). This is equivalent to ``mgcv``'s default behavior of using 10

| basis functions (before removing identifiability constrains). In case ``variables`` contains

| more then one variable ``nk`` can either bet set to a single value or to a list containing the

| number of basis functions that should be used to setup the spline matrix for every variable.

| The former implies that the same number of coefficients should be used for all variables.

| Keyword arguments that change the computation of the spline basis can be passed along via a

| dictionary to the ``basis_kwargs`` argument. Importantly, if multiple variables are present and

| a list is passed to ``nk``, a list of dictionaries with keyword arguments of the same length

| needs to be passed to ``basis_kwargs`` as well.

|

| Multiple penalties can be placed on every term by adding ``Penalty`` to the ``penalties``

| argument. In case ``variables`` contains multiple variables a separate tensor penalty (see Wood,

| 2017) will be created for every penalty included in ``penalties``. Again, key-word arguments

| that alter the behavior of the penalty creation need to be passed as dictionaries to

| ``pen_kwargs`` for every penalty included in ``penalties``.

| By default, a univariate term is penalized with a difference penalty of order 2 (Eilers & Marx,

| 2010).

|

| References:

| - Eilers, P., & Marx, B. (2010). Splines, knots, and penalties. https://doi.org/10.1002/WICS.125

| - Marra, G., & Wood, S. N. (2011). Practical variable selection for generalized additive models. Computational Statistics & Data Analysis, 55(7), 2372–2387. https://doi.org/10.1016/j.csda.2011.02.004

| - Wood, S. N. (2017). Generalized Additive Models: An Introduction with R, Second Edition (2nd ed.).

|

| :param variables: A list of the variables (strings) of which the term is a function. Need to

| exist in ``data`` passed to ``Formula``. Need to be continuous.

| :type variables: list[str]

| :param by: A string corresponding to a factor in ``data`` passed to ``Formula``. Separate

| f(``variables``) (and smoothness penalties) will be estimated per level of ``by``.

| :type by: str, optional

| :param by_cont: A string corresponding to a numerical variable in ``data`` passed to

| ``Formula``. The model matrix for the estimated smooth term f(``variables``) will be

| multiplied by the column of this variable. Can be used to estimate 'varying coefficient'

| models but also to set up binary smooths or to only estimate a smooth term for specific

| levels of a factor (i.e., what is possible for ordered factors in R & mgcv).

| :type by_cont: str, optional

| :param binary: A list containing two strings. The first string corresponds to a factor in

| ``data`` passed to ``Formula``. A separate f(``variables``) will be estimated for the level

| of this factor corresponding to the second string.

| :type binary: [str,str], optional

| :param id: Different smooth functions with the same id share their :math:`\lambda` values.

| Effect differs when also specifying a ``by`` variable: In that case, if ``id`` is set to any

| integer the penalties placed on the separate f(``variables``) will share a single smoothness

| penalty and other smooth terms will ignore this term's particular id.

| :type id: int|None, optional

| :param nk: Number of basis functions to use. Even if ``identifiable`` is true, this number will

| reflect the final number of basis functions for this term (i.e., mssm acts like you would

| have asked for 10 basis functions if ``nk=9`` and identifiable=True; the default).

| :type nk: int or list[int], optional

| :param te: For tensor interaction terms only. If set to false, the term mimics the behavior of

| ``ti()`` in mgcv (Wood, 2017). Otherwise, the term behaves like a ``te()`` term in mgcv -

| i.e., the marginal basis functions are not removed from the interaction.

| :type te: bool, optional

| :param rp: Whether or not to re-parameterize the term. Currently the Demmler & Reinsch

| parameterization is supported for univariate smooth terms (``rp=1``) and the 'natural'

| parameterization discussed by Wood (2006) for tensor smooth terms (``rp=2``). **Important**:

| when relying on the ``qEFS`` update to estimate smoothing penalty parameters, performance

| drops drastically for tensor smooth terms when **not** relying on the parameterization by

| Wood (2006). Hence, it is reccomended that you set ``rp=2`` for tensor smooths when relying

| on this update. Defaults to 0, meaning no re-parameterization.

| :type rp: int, optional

| :param constraint: What kind of identifiability constraints should be absorbed by the terms (if

| they are to be identifiable). Either QR-based constraints (default, well-behaved), by means

| of column-dropping (no infill, not so well-behaved), or by means of difference re-coding

| (little infill, not so well behaved either).

| :type constraint: mssm.src.constraints.ConstType, optional

| :param identifiable: Whether or not the constant should be removed from the space of functions

| this term can fit. Achieved by enforcing that :math:`\mathbf{1}^T \mathbf{X} = 0`

| (:math:`\mathbf{X}` here is the spline matrix computed for the observed data; see Wood,

| 2017 for details). Necessary in most cases to keep the model identifiable.

| :type identifiable: bool, optional

| :param scale_te: Whether or not the penalty matrices of marginal smooths should be scaled

| by their largest eigenvalue. This can improve numerical stability and is thus reccomended

| when relying on the ``qEFS`` update to estimate smoothing penalty parameters.

| Set to False by default.

| :type scale_te: bool, optional

| :param basis: The basis functions to use to construct the spline matrix. By default a B-spline

| basis (Eilers & Marx, 2010) implemented in :func:`mssm.src.smooths.B_spline_basis`.

| :type basis: Callable, optional

| :param basis_kwargs: A list containing one or multiple dictionaries specifying how the basis

| should be computed. Consult the docstring of the function computing the basis you want. For

| the default B-spline basis for example see the :func:`mss.src.smooths.B_spline_basis`

| function. The default arguments set by any basis function, should work for most cases

| though.

| :type basis_kwargs: dict, optional

| :param is_penalized: Should the term be left unpenalized or not. There are rarely good reasons

| to set this to False.

| :type is_penalized: bool, optional

| :param penalize_null: Should a separate Null-space penalty (Marra & Wood, 2011) be placed on

| the term. By default, the term here will leave a linear f(`variables`) un-penalized! Thus,

| there is no option for the penalty to achieve f(`variables`) = 0 even if that would be

| supported by the data. Adding a Null-space penalty provides the penalty with that power.

| This can be used for model selection instead of Hypothesis testing and is the preferred

| way in ``mssm`` (see Marra & Wood, 2011 for details).

| :type penalize_null: bool, optional

| :param penalty: A list of penalty types to be placed on the term.

| :type penalty: list[Penalty], optional

| :param pen_kwargs: A list containing one or multiple dictionaries specifying how the penalty

| should be created. Consult the docstring of the :func:`Penalty.constructor` method of the

| specific :class:`Penalty` you want to use for details.

| :type pen_kwargs: list[dict], optional

|

| Method resolution order:

| f

| GammTerm

| builtins.object

|

| Methods defined here:

|

| __init__(

| self,

| variables: list,

| by: str = None,

| by_cont: str = None,

| binary: tuple[str, str] | None = None,

| id: int | None = None,

| nk: int | list[int] = None,

| te: bool = False,

| rp: int = 0,

| constraint: ConstType = <ConstType.QR: 2>,

| identifiable: bool = True,

| scale_te: bool = False,

| basis: Callable = <function B_spline_basis at 0x7f9d5781f110>,

| basis_kwargs: dict = {},

| is_penalized: bool = True,

| penalize_null: bool = False,

| penalty: list[Penalty] | None = None,

| pen_kwargs: list[dict] | None = None

| ) -> None

| Initialize self. See help(type(self)) for accurate signature.

|

| absorb_repara(self, rpidx, X, cov, min_c, max_c)

| Computes all terms necessary to absorb a re-parameterization into the term and penalty

| matrix.

|

| References:

| - Wood, S. N. (2006). Low‐Rank Scale‐Invariant Tensor Product Smooths for Generalized Additive Mixed Models. Biometrics, 62(4), 1025–1036. https://doi.org/10.1111/j.1541-0420.2006.00574.x

| - Wood, S. N. (2017). Generalized Additive Models: An Introduction with R, Second Edition (2nd ed.).

|

| :param rpidx: Index to specific reparam. obejct. There must be a 1 to 1 relationship between

| reparam. objects and the number of marginals required by this smooth (i.e., the number

| of variables).

| :type rpidx: int

| :param X: Design matrix associated with this term.

| :type X: scipy.sparse.csc_array

| :param cov: The covariate this term is a function of as a flattened numpy array.

| :type cov: np.ndarray

| :param min_c: The minimum value of the covariate this term is a function of as a float.

| :type min_c: float

| :param max_c: The maximum value of the covariate this term is a function of as a float.

| :type max_c: float

| :raises ValueError: If this method is called with ``rpidx`` exceeding the number of this

| term's RP objects (i.e., when ``rpidx > (len(self.RP) - 1)``) or if ``self.rp`` is

| equal to a value for which no reparameterisation is implemented.

|

| build_matrix(

| self,

| ci: int,

| ti: int,

| var_map: dict,

| var_mins: dict,

| var_maxs: dict,

| factor_levels: dict,

| ridx: list[int],

| cov_flat: np.ndarray,

| use_only: list[int],

| tol: int = 0

| ) -> tuple[list[float], list[int], list[int], int]

| Builds the design/term/model matrix for this smooth term.

|

| References:

| - Wood, S. N. (2017). Generalized Additive Models: An Introduction with R, Second Edition (2nd ed.).

|

| :param ci: Current column index.

| :type ci: int

| :param ti: Index corresponding to the position the current term (i.e., self) takes on in

| the list of terms of the Formula.

| :type ti: int

| :param var_map: Var map dictionary. Keys are variables in the data, values their column

| index in the encoded predictor matrix.

| :type var_map: dict

| :param var_mins: Var mins dictionary. Keys are variables in the data, values are either the

| minimum value the variable takes on for continuous variables or ``None`` for

| categorical variables.

| :type var_mins: dict

| :param var_maxs: Var maxs dictionary. Keys are variables in the data, values are either the

| maximum value the variable takes on in for continuous variables or ``None`` for

| categorical variables.

| :type var_maxs: dict

| :param factor_levels: Factor levels dictionary. Keys are factor variables in the data,

| values are np.arrays holding the unique levels (as str) of the corresponding factor.

| :type factor_levels: dict

| :param ridx: Array of non NAN rows in the data.

| :type ridx: np.ndarray

| :param cov_flat: An array, containing all (encoded, in case of categorical predictors)

| values on each predictor (each columns of ``cov_flat`` corresponds to a different

| predictor) variable included in any of the terms in order of the data-frame passed to

| the Formula.

| :type cov_flat: np.ndarray

| :param use_only: A list holding term indices for which the matrix should be formed. For

| terms not included in this list a zero matrix will be returned. Can be set to

| ``None`` so that no terms are excluded.

| :type use_only: [int]

| :param tol: A tolerance that can be used to prune the term matrix from values close to zero

| rather than absolutely zero. Defaults to strictly zero.

| :type tol: int, optional

| :return: matrix data, matrix row indices, matrix column indices, added columns

| :rtype: tuple[list[float],list[int],list[int],int]

|

| build_penalty(

| self,

| ti: int,

| penalties: list[LambdaTerm],

| cur_pen_idx: int,

| penid: int,

| factor_levels: dict,

| col_S: int

| ) -> tuple[list[LambdaTerm], int]

| Builds a penalty matrix associated with this smooth term and returns an updated

| ``penalties`` list including it.

|

| This method is implemented by most implementations of the :class:`GammTerm` class.

| Two arguments need to be returned: the updated ``penalties`` list including the new penalty

| implemented as a :class:`LambdaTerm` and the updated ``cur_pen_idx``.

| The latter simply needs to be incremented for every penalty added to ``penalties``.

|

| :param ti: Index corresponding to the position the current term (i.e., self) takes on in

| the list of terms of the Formula.

| :type ti: int

| :param penalties: List of previosly created penalties.

| :type penalties: [LambdaTerm]

| :param cur_pen_idx: Index of the last element in ``penalties``.

| :type cur_pen_idx: int

| :param penid: If a term is subjected to multipe penalties, then ``penid`` indexes which of

| those penalties is currently implemented. Otherwise can be set to zero.

| :type penid: int

| :param factor_levels: Factor levels dictionary. Keys are factor variables in the data,

| values are np.arrays holding the unique levels (as str) of the corresponding factor.

| :type factor_levels: dict

| :param n_coef: Number of coefficients associated with this term.

| :type n_coef: int

| :param col_S: Number of columns of the total penalty matrix.

| :type col_S: int

| :return: Updated ``penalties`` list including the new penalties implemented as a

| :class:`LambdaTerm` and the updated ``cur_pen_idx``

| :rtype: tuple[list[LambdaTerm],int]

|

| get_coef_info(self, factor_levels: dict) -> tuple[int, int, list[str]]

| Returns the total number of coefficients associated with this smooth term, the number of

| unpenalized coefficients associated with this smooth term, and a list with names for each

| of the coefficients associated with this smooth term.

|

| :param factor_levels: Factor levels dictionary. Keys are factor variables in the data,

| values are np.arrays holding the unique levels (as str) of the corresponding factor.

| :type factor_levels: dict

| :return: Number of coefficients associated with term, number of un-penalized coefficients

| associated with term, coef names

| :rtype: tuple[int,int,list[str]]

|

| ----------------------------------------------------------------------

| Data descriptors inherited from GammTerm:

|

| __dict__

| dictionary for instance variables

|

| __weakref__

| list of weak references to the object

Since these are a lot of terms, we provide an overview of popular formulas in mgcv and show how they can be represented in mssm. Together with the examples in the docstrings (accessible via the help function and here), those presented in the later sections of this tutorial, and the overview table below you should be able to get started in no time!

From mgcv to mssm - Conversion table

mcgv |

mssm |

|---|---|

|

|

|

|

|

|

|

|

|

|

Assuming that cond is a factor with two-levels (“a” and “b”), then setting up a binary smooth model in mgcv in R requires creating variable dat$is_a <- ifelse(as.character(dat$cond)=="a", 1, 0). . In mssm no extra variable needs to be created (although this is also possible: dat["is_a"] = (dat["cond"].values == "a").astype(int)) for such a simple model (see table below). For the models below we further assume that “sub” identifies a categorical/factor variable, that “cond_o” represents an ordered version of “cond” in R, and that “sub_cond” is the interaction of the factor variables “cond” and “sub”.

I.e., in python:

dat["is_a"] = (dat["cond"].values == "a").astype(int)

dat["is_b"] = (dat["cond"].values == "b").astype(int)

dat["sub_cond"] = dat["cond"].values.astype(str) + "_" + dat["sub"].values.astype(str)

mcgv |

mssm |

type |

|---|---|---|

|

|

Binary smooth model |

|

|

Ordered factor model |

|

|

Random intercept model |

|

|

Random intercept + slope model |

|

|

Random intercept + slope (with separate smoothing penalties per level of cond) model |

|

|

Random smooth model |

|

|

Random smooth per level of second factor model |

Notes:

The difference between the binary smooth model and the ordered factor model is subtle: The binary smooth

f(["x"],nk=9),f(["x"],binary=["cond","a"])includes an offset (thus theidentifiablekeyword is set to False in the alternative syntax:f(["x"],by_cont="is_a",nk=10,identifiable=False)), while the ordered factor smoothf(["x"],by_cont="is_b",identifiable=True)does not. Essentially, both are difference curves, but the binary smooth can take on a constant offset while the ordered factor smooth cannot. For this reason, the extra offset for level “b” of factor “cond” also has to be added back in (vial(["cond"])) for the ordered factor model.If “cond” and “cond_o” were a factor with more than two levels, then it would be necessary to add additional ordered factor smooths for the remaining levels (i.e.,

f(["x"],by_cont="is_c",identifiable=True),f(["x"],by_cont="is_d",identifiable=True), …) as well to replicate the result of the formulay ~ cond + s(x) + s(x,by=cond_o),data=datin mgcv.For the models for which alternative

mssmoptions are specified, the different options are equivalent. They just allow you to pick the syntax you prefer.

Factor Variable Coding

The Formula class will automatically dummy-code levels of categorical variables (alphabetically/numerically ascending): in the example here, the variable “cond” has two levels: “a” and “b”. Internally, “a” will be set to the reference level (0), while “b” will be set to level 1.

If the coding should be fixed to a specific order, a codebook can be provided as keyword argument to any Formula. A codebook is a dictionary containing dictionaries as values. The keys for the codebook need to refer to categorical variables in the data-frame. The value for each key is itself again a dictionary with a key for every level of the categorical variable specified as key, every level should then receive as value an integer from 0-k, where k is the number of levels of the categorical variable to which this dictionary belongs.

Not all variables have to be specified. For example, for fixing the ordering of “cond” but to still let the Formula figure out the coding for “sub” the following codebook could be used:

codebook = {'cond':{'a': 0, 'b': 1}}

You can always extract the final codebook used by a model by calling formula.get_factor_codings() - where formula was the Formula specified for the model of interest.

Important:

If only the products involving the model matrix are to be formed (see “Very big random effect structure + MANY observations” example in the advanced topic section), the default level ordering may not reflect an alphabetical order. To ensure that this is the case, specify the codebook in advance as described above.

We will now work through a bunch of these formulas, using Gaussian additive models as an example, so that you can familiarize yourself with the syntax of mssm.

Practicing with Formulas & Estimating Additive Models

A single smooth model

In this section, we will focus exclusively on Gaussian GAMMs (or AMMs) to become familiar with the different smooth & parametric terms that we can incorporate in these models. In brief, the assumption underlying a Gaussian GAMM is that each of our \(N\) observations \(y_i\) is a realizations from a random variable \(Y_i \sim N(\mu_i,\sigma)\). We further assume that all the \(Y_i\) are mutually independent! \(\mu_i\) - the mean of each RV distribution - is modeled as an additive combination of smooth functions and parametric terms (the “additive model part”). In contrast to \(\mu_i\) which can differ between \(Y_i\), \(\sigma\) is assumed to be shared by all RVs! For a more extensive introduction, we reccomed consulting the book by Simon Wood (2017, Generalized Additive Models: An Introduction with R).

We will now start with a simple model of the mean \(\mu_i\), incorporating only a single smooth of time! In a first step, we set up the formula. We already discussed the lhs and terms arguments above - the remaining mandatory argument data is fortunately self-explanatory:

# Build a simple Gaussian GAMM

# Y_i = a + f(time) + e with e ~ N(0,sigma)

formula = Formula(lhs=lhs("y"), # The dependent variable - here y!

terms=[i(), # The intercept, a

f(["time"])], # The f(time) term, by default parameterized with 9 basis functions (after absorbing one for identifiability)

data=dat)

/opt/hostedtoolcache/Python/3.14.2/x64/lib/python3.14/site-packages/mssm/src/python/formula.py:921: UserWarning: 3003 y values (9.32%) are NA.

warnings.warn(

At this point, you have created a Formula. Now you need to set up a model. As mentioned, we are looking for a Gaussian GAMM model. GAMMs in mssm are implemented via the GAMM class. The constructor of the GAMM class takes as argument a Formula and the Family (distribution) of the response variables. We want a Gaussian. Hence, to create the model object, we write:

# Initialize the GAMM as a Gaussian one!

model = GAMM(formula,Gaussian())

At this point, the model has yet to be estimated. But we can already extract some information about what the model is going to do during estimation:

# Some info about the size of this model:

print(f"Model will estimate {model.formulas[0].get_n_coef()} coefficients from {len(model.formulas[0].get_depvar()[model.formulas[0].get_notNA()])} datapoints!")

print("Terms to be estimated:")

model.print_parametric_terms()

model.print_smooth_terms()

Model will estimate 10 coefficients from 29217 datapoints!

Terms to be estimated:

Intercept

f(['time'])

Now, to actually fit the model you need to call the model’s fit method. By default, this will display a progress bar. During estimation, the progress bar will tell you the distance to convergence. Once converged it should print Converged!. Don’t worry - normally it will not require the default of 200 fitting iterations to get there! We have disabled the progress bar here, since it does not render nicely in the documentation.

# Fit the model

model.fit(progress_bar=False)

Now that the model has been fitted, we can get more information about the estimated coefficients/effects.

# We can print a summary of the smooth terms included in the model

# and the estimated degrees of freedom + optionally p-values corresponding to each:

model.print_smooth_terms(p_values=True)

f(['time']); edf: 8.723 f: 1494.827 P(F > f) = 0.000e+00 ***

Note: p < 0.001: ***, p < 0.01: **, p < 0.05: *, p < 0.1: . p-values are approximate!

The single smooth term has used approximately 8.72 basis functions (of the 9 it could use) and there is strong evidence that the smooth is significantly different from zero at least at some time-point.

# To get the total degrees of freedom used by the model we can access the ``edf`` argument:

round(model.edf,ndigits=3)

np.float64(9.723)

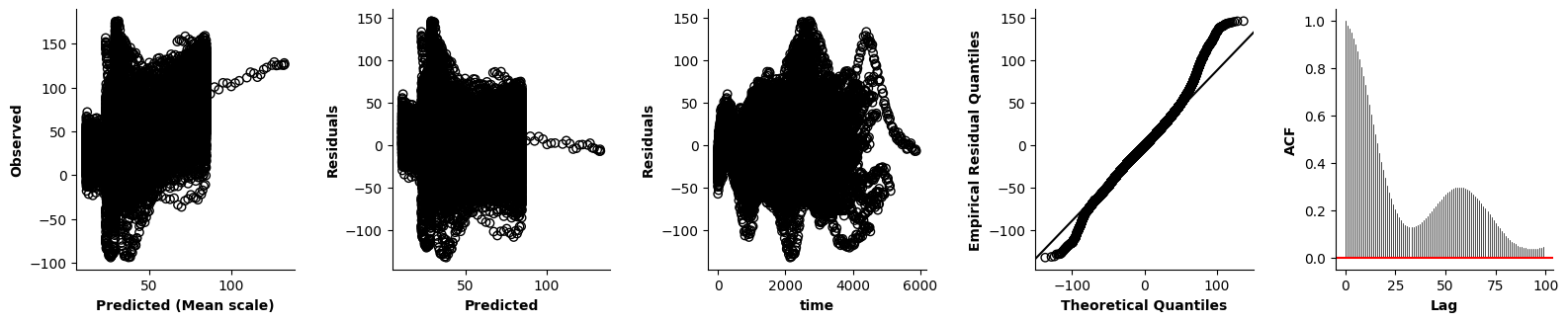

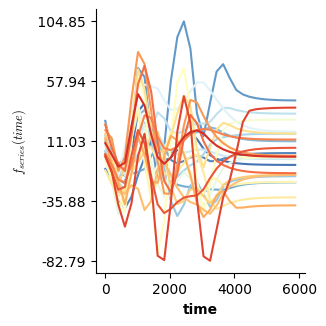

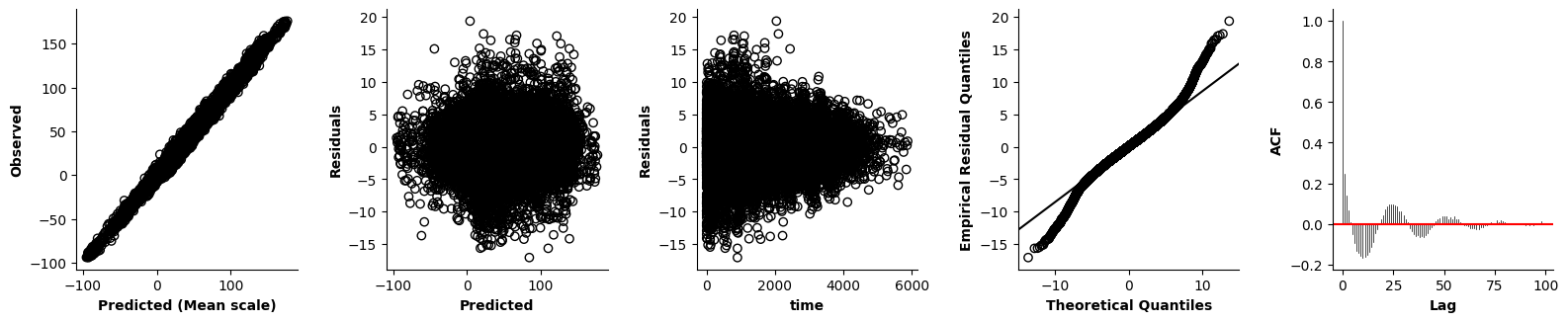

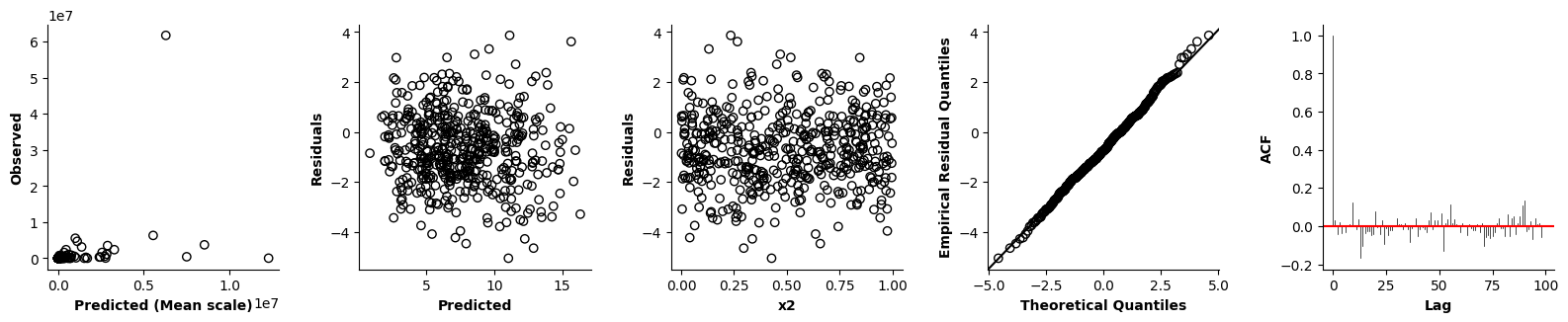

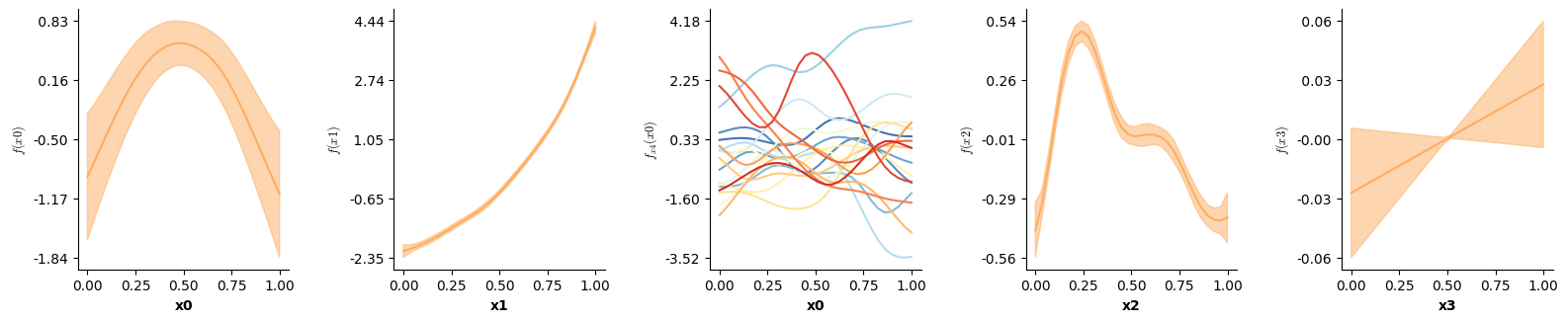

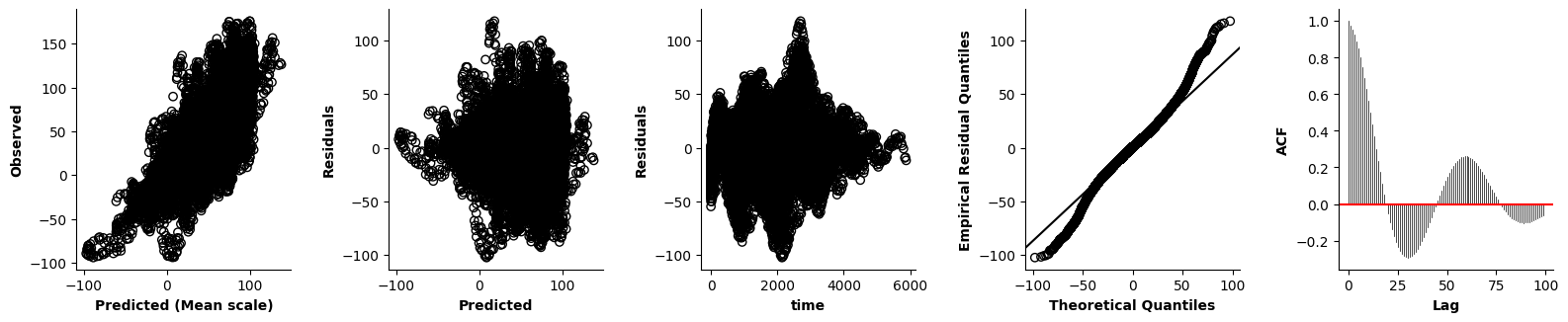

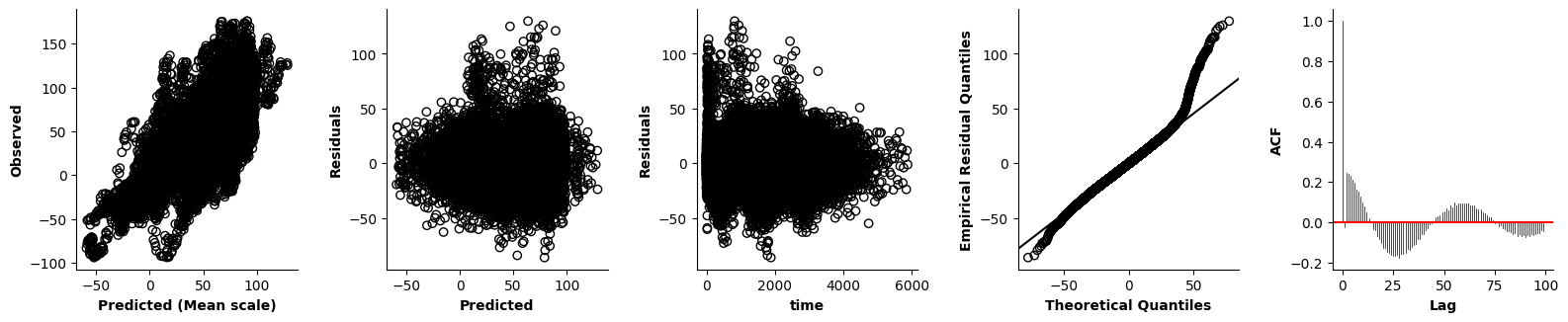

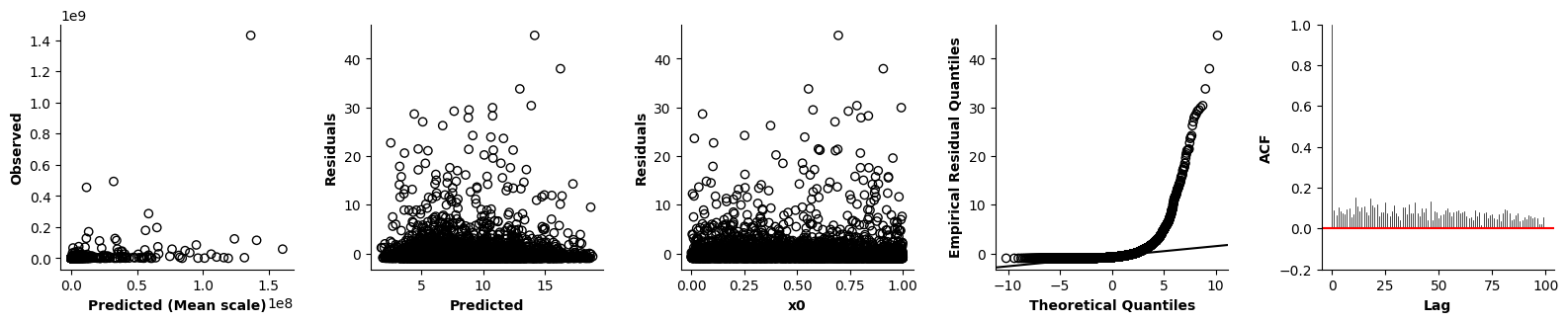

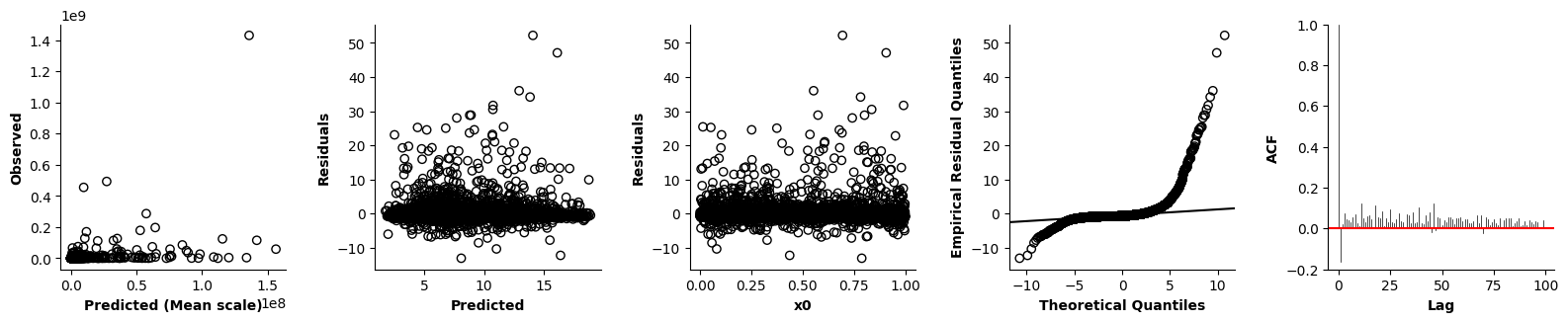

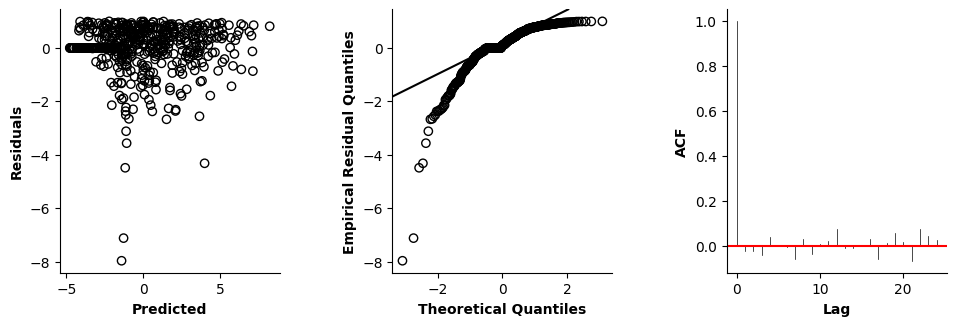

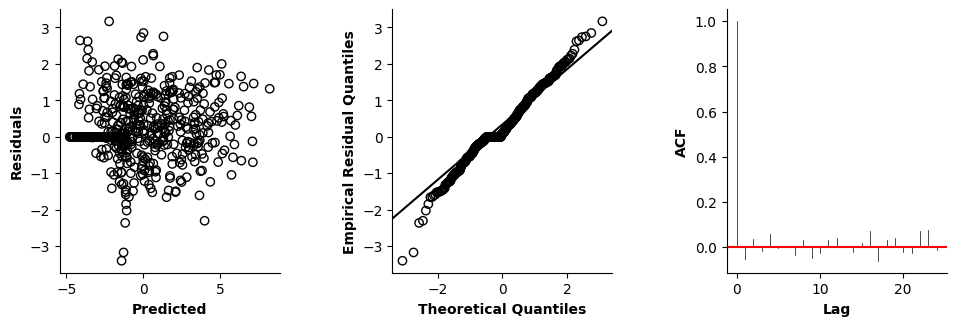

Validation of the assumptions sketched out earlier is absolutely crucial. For the additive model, the independence of \(Y_1\), \(Y_2\), … \(Y_N\) implies that the N-dimensional residual vector (the difference between the observed values \(y_i\) and the model predictions \(\mu_i\)) should look like what could be expected from drawing N independent samples from \(N(0,\sigma)\). This is best checked in residual plots as generated by the plot_val function available from mssmViz. Clearly, this model fails to meet the assumptions - we will get back to that later!!

fig = plt.figure(figsize=(5*single_size,single_size),layout='constrained')

axs = fig.subplots(1,5,gridspec_kw={"wspace":0.1})

plot_val(model,pred_viz=["time"],axs=axs,resid_type="Deviance")

plt.show()

# Of course mssm offers all information visualized in the plots above as attributes/via method calls, so that

# you can also make your own plots. Here some examples:

coef, sigma = model.get_pars() # Coef = weights for basis functions, sigma = **variance** of residuals!

pred = model.preds[0] # The model prediction for the entire data, here: a + f(Time)!

mu = model.mus[0] # The predicted mean - this will be identical to pred for the additive model, but this will not be the case for generalized additive models!

res = model.get_resid() # The residuals y_i - \mu_i

y = model.formulas[0].y_flat[model.formulas[0].NOT_NA_flat] # The dependent variable after NAs were removed

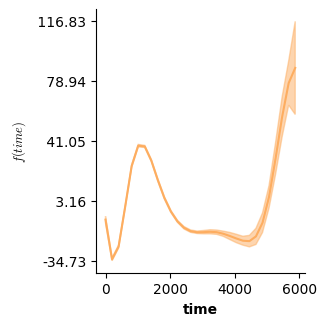

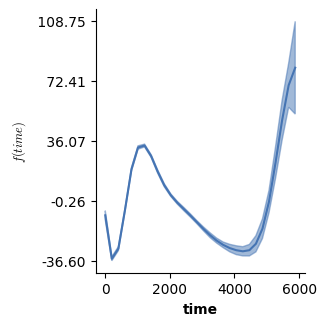

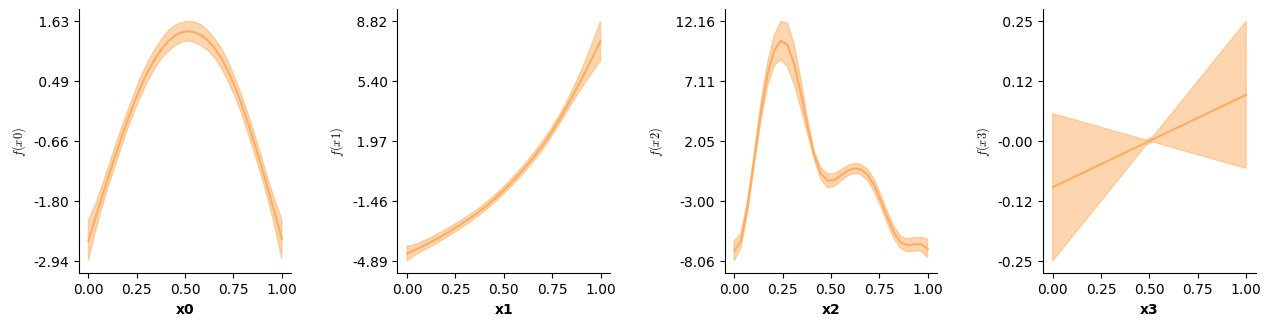

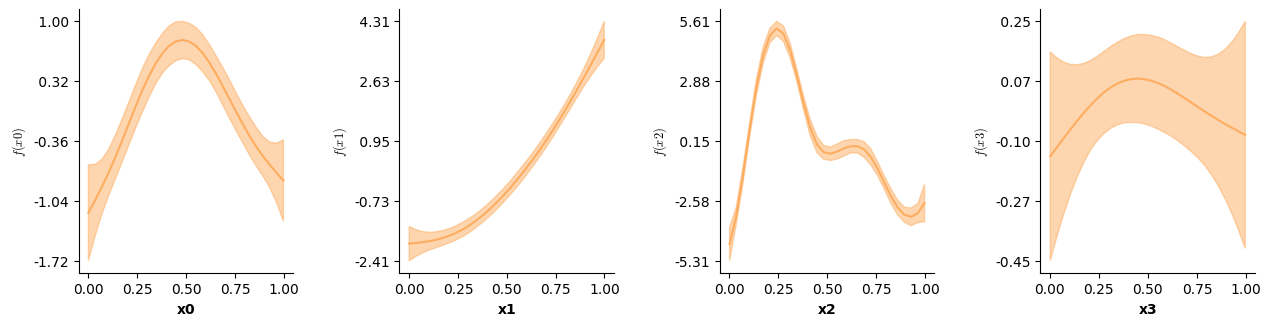

Apart from validation plots, mssmViz also has functions to visualize the model’s estimated smooth terms. Below, we print the estimated smooth of time.

# Plot partial prediction for a single smooth

plot(model,fig_size=(single_size,single_size))

A by-condition GAM

To get a by-factor model (s(Time,by=cond) in mgcv) mssm also provides a by keyword! As mentioned above, by default Formula dummy-codes factor variables in alphapetical order. Hence, for the cond variable “a” will be coded as 0, while “b” will be coded as 1.

# Y = a + b1*c1 + f0(time) + f1(time) + e

# with e ~ N(0,sigma) and c1 == 1 if cond == "b" else 0

# and f0() and f1() representing the smooths over time for

# the first (a) and second (b) level of cond respectively.

formula2 = Formula(lhs=lhs("y"), # The dependent variable - here y!

terms=[i(), # The intercept, a

l(["cond"]), # One coefficient per dummy-predictor necessary for factor cond - here b

f(["time"],by="cond")], # One f(time) term for every level of cond

data=dat)

/opt/hostedtoolcache/Python/3.14.2/x64/lib/python3.14/site-packages/mssm/src/python/formula.py:921: UserWarning: 3003 y values (9.32%) are NA.

warnings.warn(

# Fit the model

model2 = GAMM(formula2,Gaussian())

model2.fit(progress_bar=False)

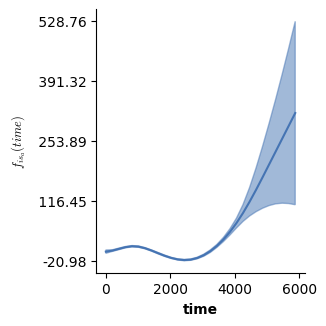

# Again, we print a summary of the edf. per term in the model.

# Note: separate smooths were estimated for each level of "cond" so two edf values are printed.

model2.print_smooth_terms()

f(['time'],by=cond): a; edf: 6.881

f(['time'],by=cond): b; edf: 8.714

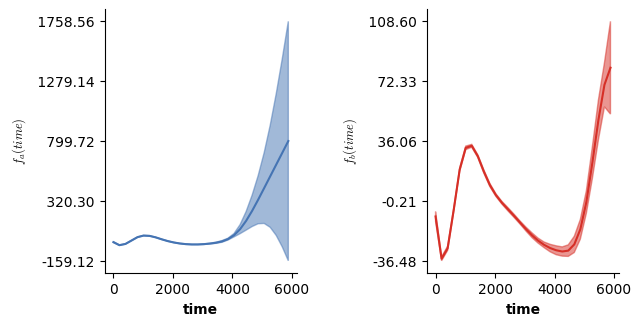

# Partial prediction (using only the f(time)) for all conditions

# Basically what plot(model) does in R in case model was obtained from mgcv

fig = plt.figure(figsize=(2*single_size,single_size),layout='constrained')

axs = fig.subplots(1,2,gridspec_kw={"wspace":0.1})

plot(model2,axs=axs)

plt.show()

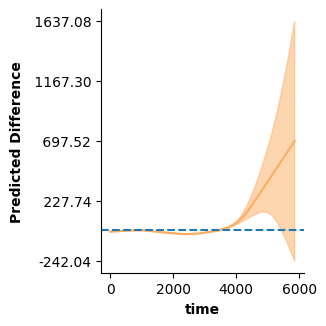

With mssmViz we can also look at the predicted difference in the mean between two conditions: plot_diff allows us to plot the predicted difference over time between conditions a and b. Note that plot_diff does by default take into account all terms included in the model, so the offset differences induced by a and b1 are taken into account!!

You can again use the help or print function, to learn more about the plot_diff function:

print(plot_diff.__doc__)

Plots the expected difference (and CI around this expected difference) between two sets of

predictions, evaluated for `pred_dat1` and `pred_dat2`.

This function works with all GAMM models, but only supports ``GAMMLSS`` and ``GSMM`` models when

setting ``response_scale=False``. The latter is by default set to True, which means that, in

contrast to ``plot``, the predicted difference is computed on the scale of the mean

(i.e., response-scale) by default.

This function is primarily designed to visualize the expected difference between two levels of a

categorical/factor variable. For example, consider the following model below, including a

separate smooth of "time" per level of the factor "cond". It is often of interest to visualize

*when* in time the two levels of "cond" differ from each other in their dependent variable.

For this, the difference curve over "time", essentially the smooth of "time" for the first level

subtracted from the smooth of "time" for the second level of factor "cond" (offset terms can

also be accounted for, check the `use` argument), can be visualized together with a CI

(Wood, 2017). This CI can provide insights into whether and *when* the two levels can be

expected to be different from each other. To visualize this difference curve as well as the

difference CI, this function can be used as follows::

# Define & estimate model

model = GAMM(Formula(lhs("y"),[i(), l(["cond"]), f(["time"],by="cond")],data=dat),

Gaussian())

model.fit()

# Create prediction data, differing only in the level of factor cond

time_pred = np.linspace(0,np.max(dat["time"]),30)

new_dat1 = pd.DataFrame({"cond":["a" for _ in range(len(time_pred))],

"time":time_pred})

new_dat2 = pd.DataFrame({"cond":["b" for _ in range(len(time_pred))],

"time":time_pred})

# Now visualize diff = (\alpha_a + f_a(time)) - (\alpha_b + f_b(time)) and the CI around

# diff

plot_diff(pred_dat1,pred_dat2,["time"],model)

This is only the most basic example to illustrate the usefulness of this function. Many other

options are possible. Consider for example the model below, which allows for

the expected time-course to vary smoothly as a function of additional covariate "x" - achieved

by inclusion of the tensor smooth term of "time" and "x". In addition, this

model allows for the shape of the tensor smooth to differ between the levels of factor "cond"::

model = GAMM(Formula(lhs("y"),[i(), l(["cond"]), f(["time","x"],by="cond",te=True)],

data=dat),Gaussian())

For such a model, multiple predicted differences might be of interest. One option would be to

look only at a single level of "cond" and to visualize the predicted difference

in the time-course for two different values of "x" (perhaps two quantiles). In that case,

`pred_dat1` and `pred_dat2` would have to be set up to differ only in the value of

"x" - they should be equivalent in terms of "time" and "cond" values.

Alternatively, it might be of interest to look at the predicted difference between the tensor

smooth surfaces for two levels of factor "cond". Rather than being interested in a difference

curve, this would mean we are interested in a difference *surface*. To achieve this,

`pred_dat1` and `pred_dat2` would again have to be set up to differ only in the value of

"cond" - they should be equivalent in terms of "time" and "x" values. In addition, it would be

necessary to specify `tvars=["time","x"]`. Note that, for such difference surfaces,

areas of the difference prediction for which the CI contains zero will again be visualized with

low opacity if the CI is to be visualized.

References:

- Wood, S. N. (2017). Generalized Additive Models: An Introduction with R, Second Edition (2nd ed.).

- Simpson, G. (2016). Simultaneous intervals for smooths revisited.

:param pred_dat1: A pandas DataFrame containing new data for which the prediction is to be

compared to the prediction obtained for `pred_dat2`. Importantly, all variables present in

the data used to fit the model also need to be present in this DataFrame. Additionally,

factor variables must only include levels also present in the data used to fit the model.

If you want to exclude a specific factor from the difference prediction (for example the

factor subject) don't include the terms that involve it in the ``use`` argument.

:type pred_dat1: pandas.DataFrame

:param pred_dat2: Like `pred_dat1` - ideally differing only in the level of a single factor

variable or the value of a single continuous variable.

:type pred_dat2: pandas.DataFrame

:param tvars: List of variables to be visualized - must contain one string for difference

predictions visualized as a function of a single variable, two for difference predictions

visualized as a function of two variables

:type tvars: [str]

:param model: The estimated GAMM or GAMMLSS model for which the visualizations are to be

obtained

:type model: GAMM or GAMMLSS

:param use: The indices corresponding to the terms that should be used to obtain the prediction

or ``None`` in which case all fixed effects will be used, defaults to None

:type use: [int]|None, optional

:param dist_par: The index corresponding to the parameter for which to make the prediction

(e.g., 0 = mean) - only necessary if a GAMMLSS or GSMM model is provided, defaults to 0

:type dist_par: int, optional

:param ci_alpha: The alpha level to use for the standard error calculation. Specifically,

1 - (``alpha``/2) will be used to determine the critical cut-off value according to a

N(0,1), defaults to 0.05

:type ci_alpha: float, optional

:param whole_interval: Whether or not to adjuste the point-wise CI to behave like whole-function

(based on Wood, 2017; section 6.10.2 and Simpson, 2016), defaults to False

:type whole_interval: bool, optional

:param n_ps: How many samples to draw from the posterior in case the point-wise CI is adjusted

to behave like a whole-function CI, defaults to 10000

:type n_ps: int, optional

:param seed: Can be used to provide a seed for the posterior sampling step in case the

point-wise CI is adjusted to behave like a whole-function CI, defaults to None

:type seed: int|None, optional

:param cmp: string corresponding to name for a matplotlib colormap, defaults to None in which

case it will be set to 'RdYlBu_r'.

:type cmp: str|None, optional

:param plot_exist: Whether or not an indication of the data distribution should be provided.

For difference predictions visualized as a function of a single variable this will simply

hide predictions outside of the data-limits. For difference predictions visualized as a

function of a two variables setting this to true will result in values outside of data

limits being hidden, defaults to False

:type plot_exist: bool, optional

:param response_scale: Whether or not predictions and CIs should be shown on the scale of the

model predictions (linear scale) or on the 'response-scale' i.e., the scale of the mean,

defaults to True

:type response_scale: bool, optional

:param ax: A ``matplotlib.axis.Axis`` on which the Figure should be drawn, defaults to None in

which case an axis will be created by the function and plot.show() will be called at the end

:type ax: matplotlib.axis.Axis, optional

:param fig_size: Tuple holding figure size, which will be used to determine the size of the

figures created if `ax=None`, defaults to (6/2.54,6/2.54)

:type fig_size: tuple[float,float], optional

:param ylim: Tuple holding y-limits (z-limits for 2d plots), defaults to None in which case

y_limits will be inferred from the predictions made

:type ylim: tuple[float,float]|None, optional

:param col: A float in [0,1]. Used to get a color for univariate predictions from the chosen

colormap, defaults to 0.7

:type col: float, optional

:param label: A label to add to the y axis for univariate predictions or to the color-bar for

tensor predictions, defaults to None

:type label: str|None, optional

:param title: A title to add to the plot defaults to None

:type title: str|None, optional

:param lim_dist: The floating point distance (on normalized scale, i.e., values have to be in

``[0,1]``) at which a point is considered too far away from training data. Setting this to

0 means we visualize only points for which there is trainings data, setting this to 1 means

visualizing everything. Defaults to 0.1

:type lim_dist: float, optional

:raises ValueError: If a visualization is requested for more than 2 variables

Thus, to visualize the predicted difference we need to set up two data-frames, one per level of “cond”. The plot_diff function then figures out the rest:

time_pred = [t for t in range(0,max(dat["time"]),20)]

new_dat1 = pd.DataFrame({"cond":["a" for _ in range(len(time_pred))],

"time":time_pred})

new_dat2 = pd.DataFrame({"cond":["b" for _ in range(len(time_pred))],

"time":time_pred})

# Note, by default `plot_diff` excludes predictor ranges for which one of the two conditions

# contains no values (time > 4000 only exists in cond b). This behavior can be changed with

# the `plot_exist` keyword.

fig = plt.figure(figsize=(single_size,single_size),layout='constrained')

ax = fig.subplots(1,1)

plot_diff(new_dat1,new_dat2,["time"],model2,ax=ax)

ax.axhline(0,linestyle='dashed')

plt.show()

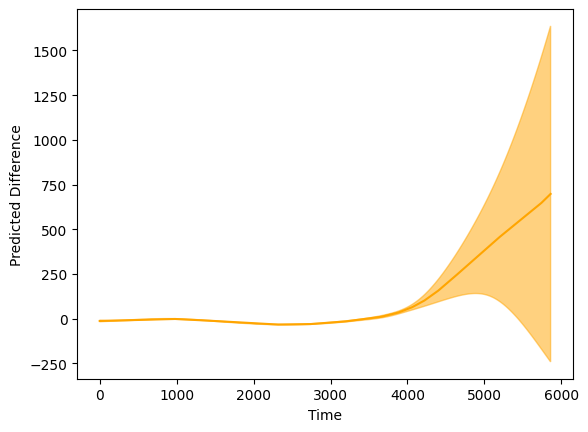

Alternatively, you can also extract the predicted difference yourself, using the model’s predict_diff method and then create your own plot:

pred_diff,b = model2.predict_diff(new_dat1,new_dat2,use_terms=None)

plt.fill([*new_dat1["time"],*np.flip(new_dat1["time"])],

[*(pred_diff + b),*np.flip(pred_diff - b)],alpha=0.5,color='orange')

plt.plot(new_dat1["time"],pred_diff,color='orange')

plt.xlabel("Time")

plt.ylabel("Predicted Difference")

Text(0, 0.5, 'Predicted Difference')

A binary smooth model

If the difference between conditions is of interest it might be more desirable to directly fit a difference curve for specific levels of the factor of interest, rather than plotting the predicted difference. This can be beneficial in case conditions differ in the values of the predictor over which the difference is to be evaluated, because confidence intervals will often be narrower.

We set up such a model below!

formula3 = Formula(lhs=lhs("y"), # The dependent variable - here y!

terms=[i(), # The intercept, a

f(["time"]), # One f(time) term - will correspond to the baseline level for condition: here let's choose b

f(["time"],binary=["cond","a"])], # Another f(time), this one models the difference over time from the other f(time) term for cond=a!

data=dat)

/opt/hostedtoolcache/Python/3.14.2/x64/lib/python3.14/site-packages/mssm/src/python/formula.py:921: UserWarning: 3003 y values (9.32%) are NA.

warnings.warn(

# Fit the model

model3 = GAMM(formula3,Gaussian())

model3.fit(progress_bar=False)

model3.print_smooth_terms()

f(['time']); edf: 8.72

f(['time'],by=a); edf: 6.693

# Let's look at just the f(time) for level b - this one looks just like before!

plot(model3,which=[1],fig_size=(single_size,single_size),prov_cols=0.1)

# However, just the prediction from the second f(time) does look a bit different

# now! In fact it represents the difference between the two conditions!

plot(model3,which=[2],fig_size=(single_size,single_size))

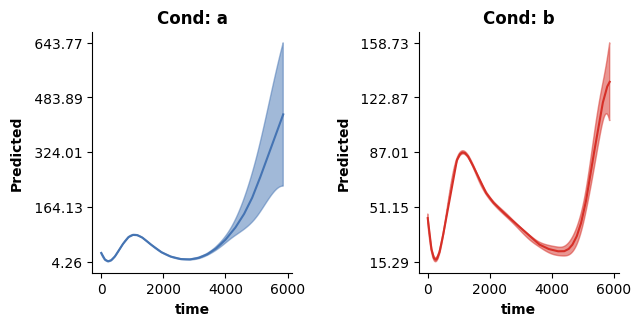

If we use all terms we can still predict how y changes over time for both conditions - and thus essentially recover the predictions from model 2! For this we again make use of the plot_fitted function, which allows to visualize the combined prediction of multiple terms included in the model (again, see the doc string!)

fig = plt.figure(figsize=(2*single_size,single_size),layout='constrained')

axs = fig.subplots(1,2,gridspec_kw={"wspace":0.1})

cols = [0.1,0.9]

titles = ["Cond: a", "Cond: b"]

for ci,c in enumerate(["a","b"]):

# Set up some new data for prediction

time_pred = [t for t in range(0,max(dat["time"]),20)]

new_dat = pd.DataFrame({"cond":[c for _ in range(len(time_pred))],

"time":time_pred})

# Now make prediction using all terms

plot_fitted(new_dat,["time"],model3,ax=axs[ci],col=cols[ci],use=[0,1,2],title=titles[ci])

plt.show()

An ordered factor model

In mgcv in R, the by keyword is sensitive to whether the factor is of type ordered or not. This is not the case in mssm, but “ordered factor models” can be implemented nonetheless. As mentioned in the previous section, the syntax for the formula is very similar to the binary model - we just need to create an additional “is_by” variable:

dat["is_a"] = (dat["cond"].values == "a").astype(int)

# We also specify a code-book to recode the model so that we get an offset term for level "a" when adding `l(["cond"])` to the model

codebook = {"cond":{"b":0,"a":1}}

formula3O = Formula(lhs=lhs("y"), # The dependent variable - here y!

terms=[i(), # The intercept, a

l(["cond"]), # An offset term - here for level a

f(["time"]), # One f(time) term - will correspond to the baseline level for condition: here let's choose b

f(["time"],by_cont="is_a")], # Another f(time), this one models the difference over time from the other f(time) term for cond=a but cannot take on a constant!

data=dat,

codebook=codebook)

/opt/hostedtoolcache/Python/3.14.2/x64/lib/python3.14/site-packages/mssm/src/python/formula.py:921: UserWarning: 3003 y values (9.32%) are NA.

warnings.warn(

model3O = GAMM(formula3O,Gaussian())

model3O.fit(progress_bar=False)

model3O.print_parametric_terms()

Intercept: 52.878, t: 211.867, DoF.: 29197, P(|T| > |t|): 0.000e+00 ***

cond_a: -14.002, t: -29.029, DoF.: 29197, P(|T| > |t|): 0.000e+00 ***

Note: p < 0.001: ***, p < 0.01: **, p < 0.05: *, p < 0.1: .

# Let's look at just the f(time) for level b - this one again looks just like before!

plot(model3O,which=[2],fig_size=(single_size,single_size),prov_cols=0.1)

# The predicted effect of the second smooth `f(["time"],by_cont="is_a")`:

plot(model3O,which=[3],fig_size=(single_size,single_size),prov_cols=0.1)

The predicted effect of the second smooth f(["time"],by_cont="is_a") looks very similar to the binary smooth from model 3 - the difference is a different offset shift. To understand what is goind on, remember that both f(["time"],by_cont="is_a") in model 3O and f(["time"],binary=["cond","a"]) in model 3 are difference curves. The distinction is that the latter can include an offset shift, while the former does not. In model 3O, this offset shift is added back in by the l(["cond"]) term:

model3O.print_parametric_terms()

Intercept: 52.878, t: 211.867, DoF.: 29197, P(|T| > |t|): 0.000e+00 ***

cond_a: -14.002, t: -29.029, DoF.: 29197, P(|T| > |t|): 0.000e+00 ***

Note: p < 0.001: ***, p < 0.01: **, p < 0.05: *, p < 0.1: .

If you add -14.002 to the estimate for f(["time"],by_cont="is_a"), you essentially end up again with the estimated effect for f(["time"],binary=["cond","a"]). Note, that while that is true in this simple model, this is not generally the case - depending on how you set up the ordered factor smooths.

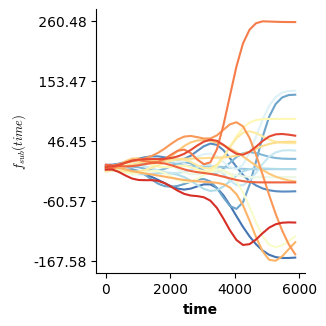

Tensor smooth interactions

Non-linear interactions between continuous variables are supported via tensor smooth interactions (see Wood, 2006; Wood, 2017). Both the behavior achieved by the ti() and te() terms in mgcv is supported. Let’s start with considering the te() term behavior!

formula4 = Formula(lhs=lhs("y"), # The dependent variable - here y!

terms=[i(), # The intercept, a

l(["cond"]), # Offset for cond='b'

f(["time","x"],by="cond",te=True)], # one smooth surface over time and x - f(time,x) - per level of cond: three-way interaction!

data=dat)

/opt/hostedtoolcache/Python/3.14.2/x64/lib/python3.14/site-packages/mssm/src/python/formula.py:921: UserWarning: 3003 y values (9.32%) are NA.

warnings.warn(

# Fit the model

model4 = GAMM(formula4,Gaussian())

model4.fit(progress_bar=False)

# We end up with two smooth terms - one surface for every level of cond.

model4.print_smooth_terms()

f(['time', 'x'],by=cond): a; edf: 7.507

f(['time', 'x'],by=cond): b; edf: 8.84

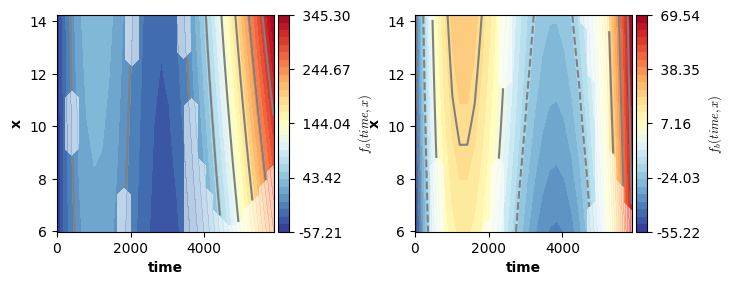

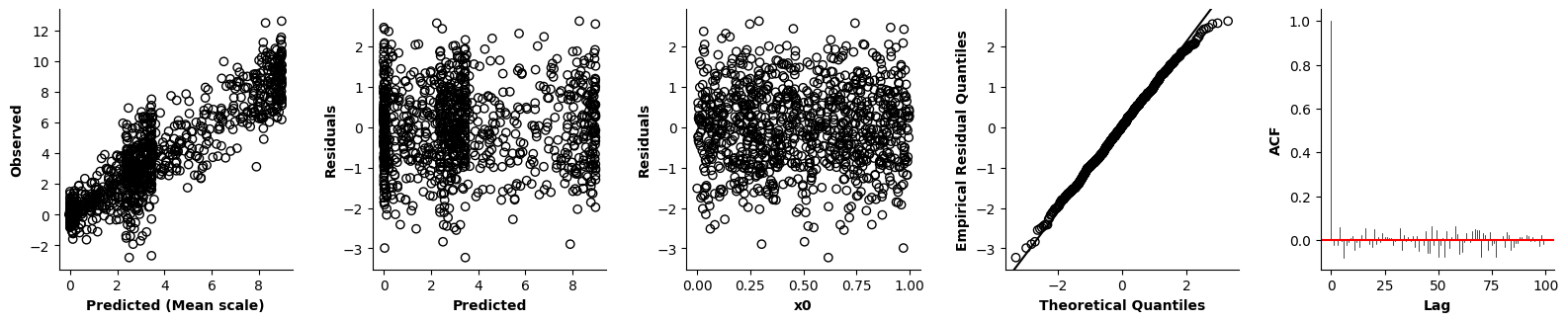

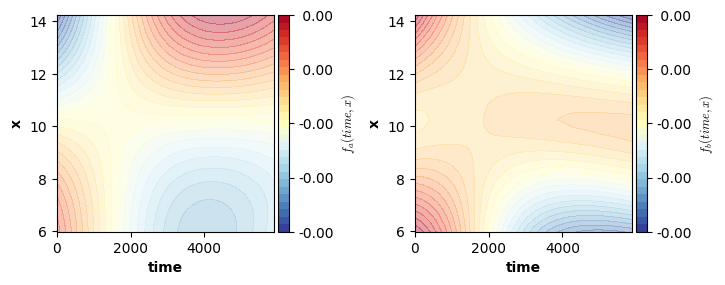

We can now visualize the smooth functions. For this we can again use the plot function. Remember: the plot function only visualizes the estimated smooth terms, it ignores all other terms. That is, in this example the smooth functions are not shifted up/down by the intercept and offset adjustment for level “b” of factor “cond”.

Note: the shaded areas in the plot below correspond to areas outside of a (by default 95%) credible interval.

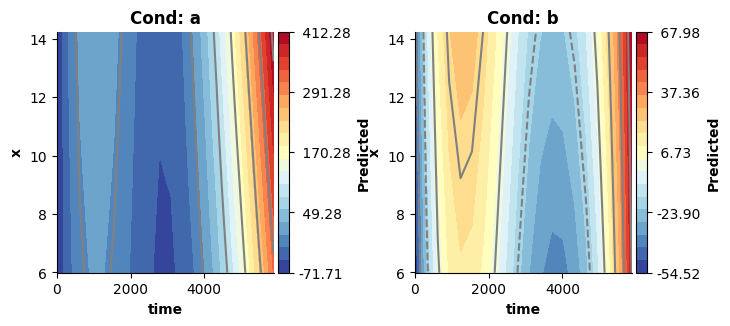

fig = plt.figure(figsize=(2*single_size,single_size),layout='constrained')

axs = fig.subplots(1,2,gridspec_kw={"wspace":0.3})

plot(model4,axs=axs)

plt.show()

The ti() term in mgcv enables decomposing smooth interactions into separate interaction (including lower order interactions) and main effects! In mssm this is achieved by omitting the te=True argument (the default). In that case, separate smooth terms have to be included for the lower order interactions, just like in mgcv:

formula5 = Formula(lhs=lhs("y"), # The dependent variable - here y!

terms=[i(), # The intercept, a

l(["cond"]), # Offset for cond='b'

f(["time"],by="cond"), # to-way interaction between time and cond; one smooth over time per cond level

f(["x"],by="cond"), # to-way interaction between x and cond; one smooth over x per cond level

f(["time","x"],by="cond")], # one smooth surface over time and x - f(time,x) - per level of cond: three-way interaction!

data=dat)

/opt/hostedtoolcache/Python/3.14.2/x64/lib/python3.14/site-packages/mssm/src/python/formula.py:921: UserWarning: 3003 y values (9.32%) are NA.

warnings.warn(

# Fit the model

model5 = GAMM(formula5,Gaussian())

model5.fit(progress_bar=False)

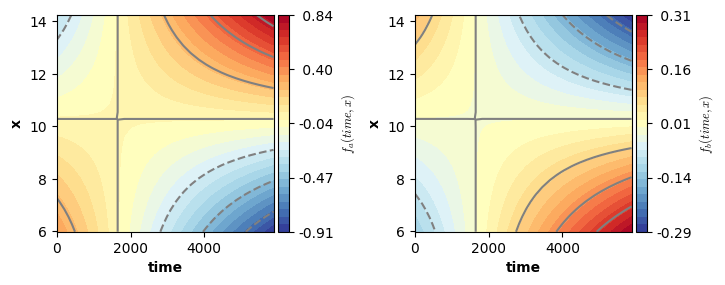

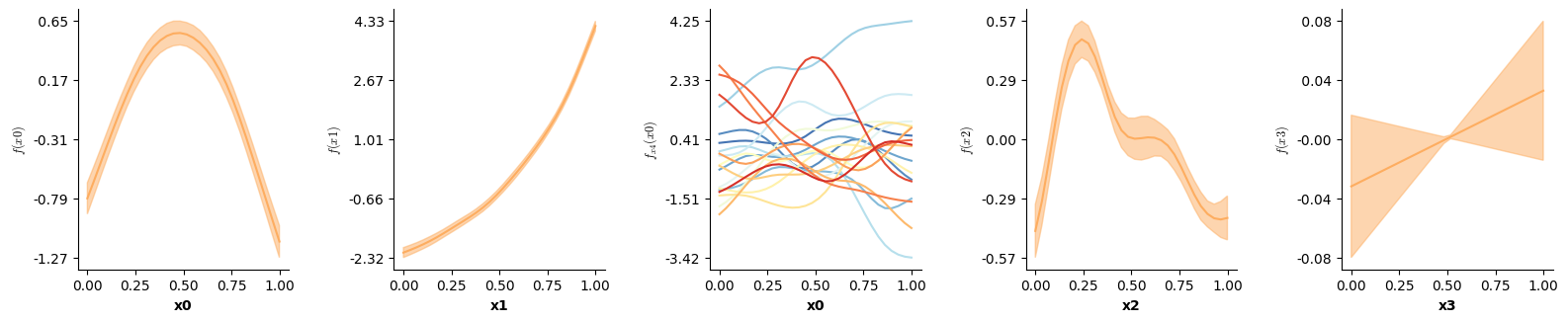

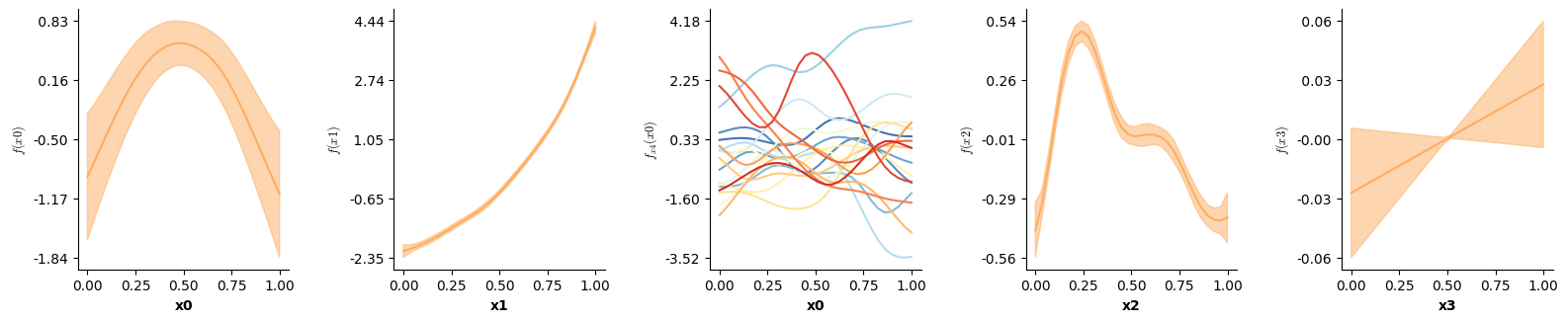

# We end up with a total of 6 smooth terms in this model!

model5.print_smooth_terms()

f(['time'],by=cond): a; edf: 6.88

f(['time'],by=cond): b; edf: 8.716

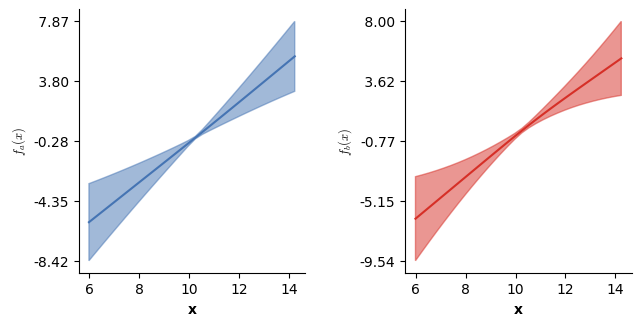

f(['x'],by=cond): a; edf: 1.042

f(['x'],by=cond): b; edf: 1.202

f(['time', 'x'],by=cond): a; edf: 1.0

f(['time', 'x'],by=cond): b; edf: 1.0

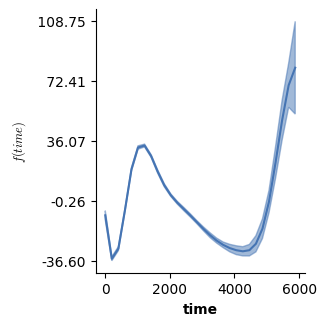

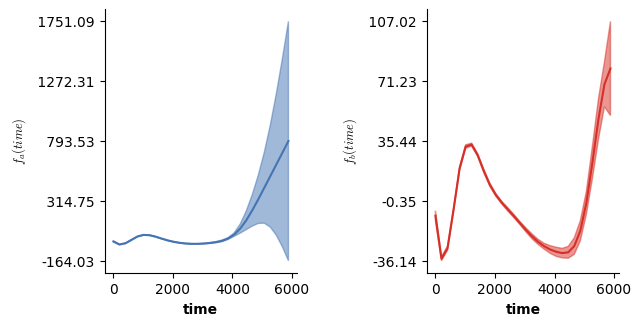

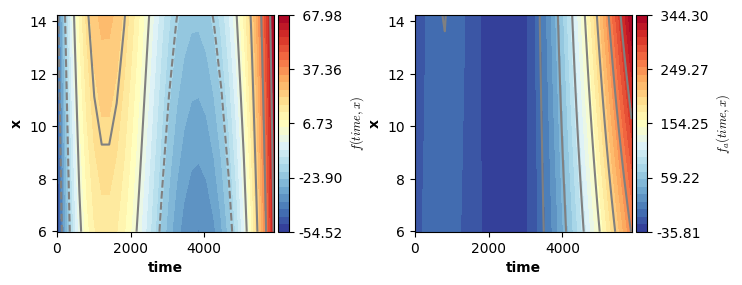

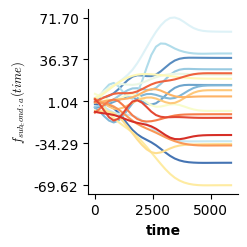

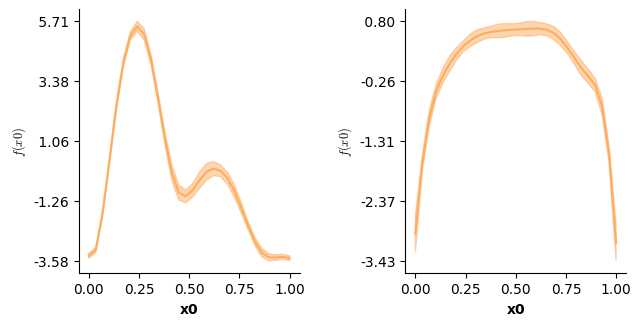

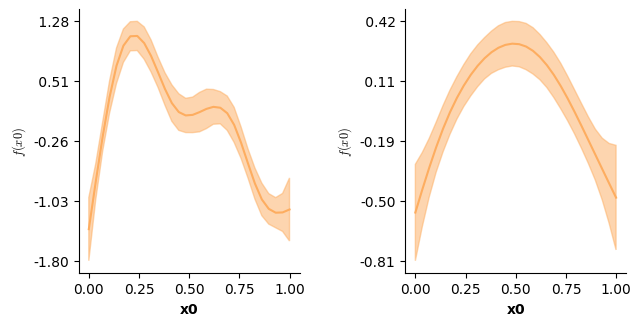

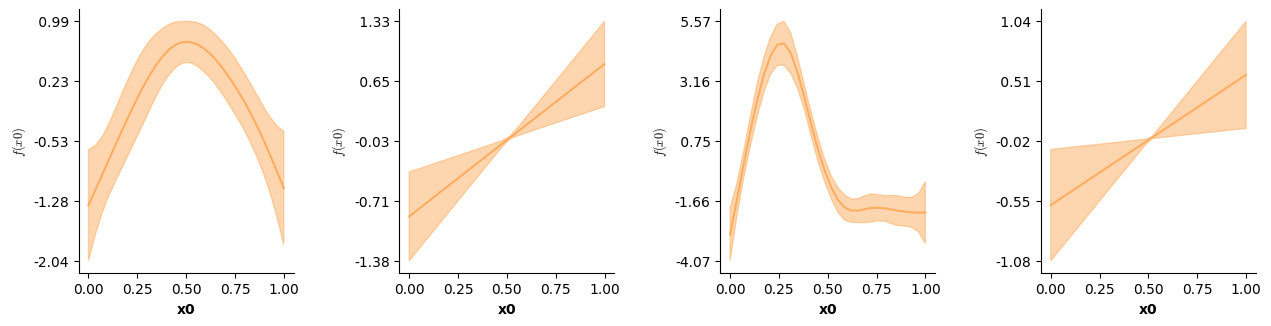

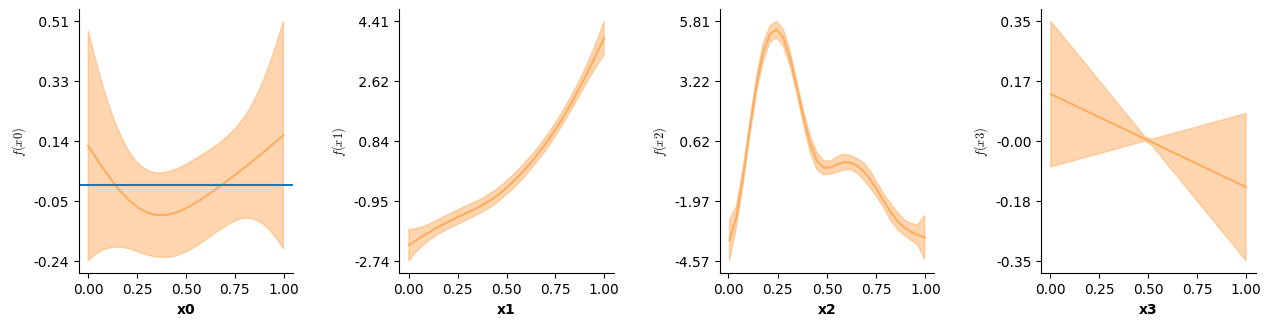

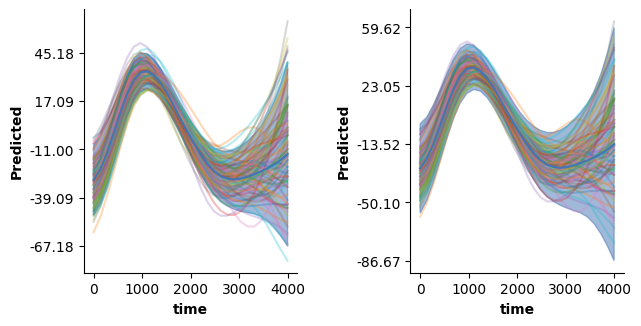

We can now visualize the partial main & interaction effects:

# Visualizing the partial main effects of time.

fig = plt.figure(figsize=(2*single_size,single_size),layout='constrained')

axs = fig.subplots(1,2,gridspec_kw={"wspace":0.1})

plot(model5,which=[2],axs=axs,ci=True)

plt.show()

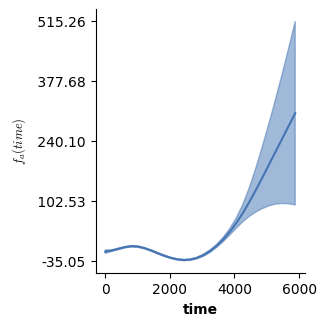

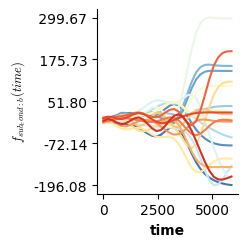

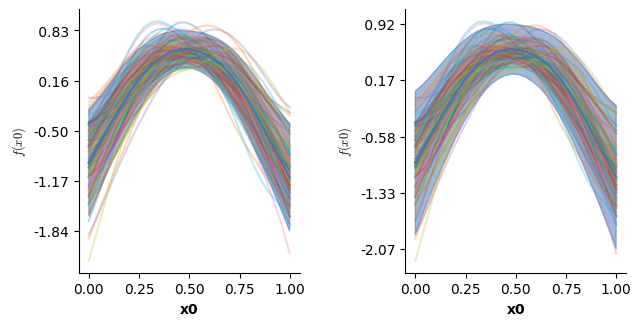

# Visualizing the partial main effects of x.

fig = plt.figure(figsize=(2*single_size,single_size),layout='constrained')

axs = fig.subplots(1,2,gridspec_kw={"wspace":0.1})

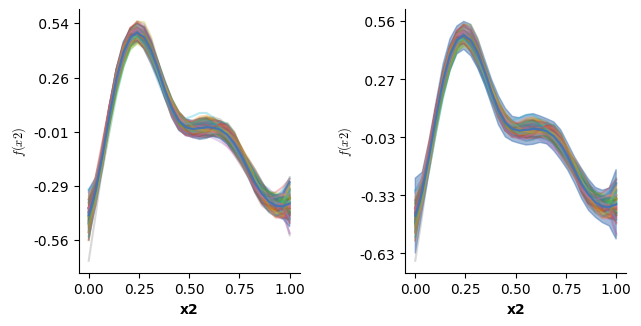

plot(model5,which=[3],axs=axs,ci=True)

plt.show()

# Visualizing the partial interaction:

fig = plt.figure(figsize=(2*single_size,single_size),layout='constrained')

axs = fig.subplots(1,2,gridspec_kw={"wspace":0.3})

plot(model5,which=[4],axs=axs,ci=False)

plt.show()

Note, that these surfaces really covers only the three-way interaction estimates (ignoring the main effects). Hence, they look very different from the previous plots. However, we can still recover (approximately) the surfaces estimated by model 4, when visualizing the three-way interaction + the two-way interactions!

In this case, the added interactions produce surfaces comparable to those obtained from model 4. This is not guaranteed, and in practice models set up like model 5 will tend to produce more flexible solutions - because they have a larger solution space, in which they might find evidence for a more complex solution (see Wood, 2017).

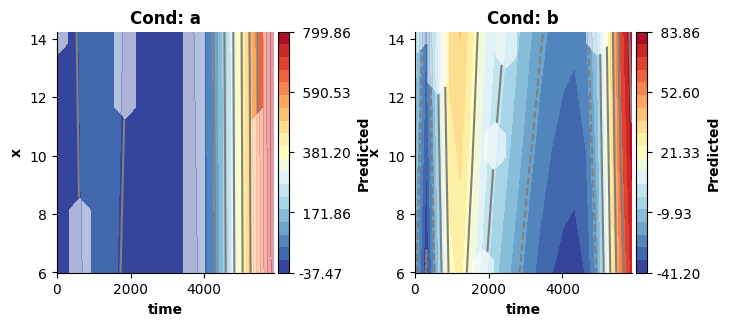

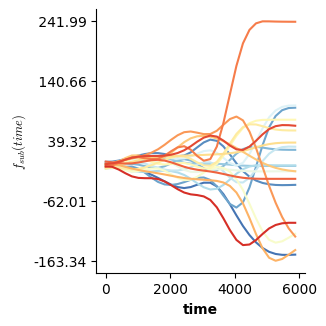

# Visualizing the three-way interaction + the two-way interactions approximates the surfaces estimated by model4, which did not attempt to decompose these.

fig = plt.figure(figsize=(2*single_size,single_size),layout='constrained')

axs = fig.subplots(1,2,gridspec_kw={"wspace":0.3})

titles = ["Cond: a", "Cond: b"]

# Set up some new data for prediction

time_pred = []

x_pred = []

xr = [t for t in np.linspace(min(dat["x"]),max(dat["x"]),20)]

tr = np.linspace(0,max(dat["time"]),len(xr))

for t in tr:

for x in xr:

time_pred.append(t)

x_pred.append(x)

# Loop over conditions

for ci,c in enumerate(["a","b"]):

new_dat = pd.DataFrame({"x":x_pred,

"cond":[c for _ in range(len(x_pred))],

"time":time_pred})

plot_fitted(new_dat,["time","x"],model5,ax=axs[ci],use=[2,3,4],title=titles[ci])

plt.show()

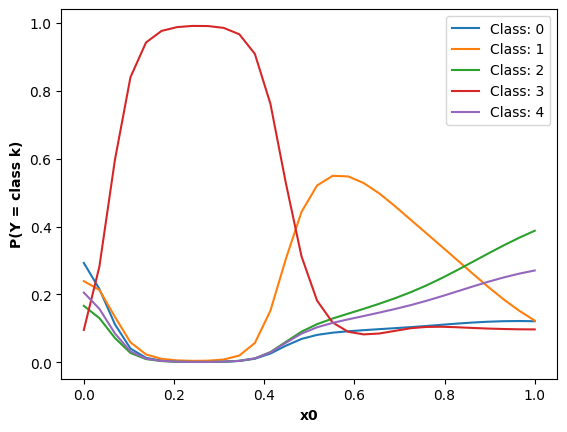

Binary tensor smooth interactions

The binary smooth approach generalizes beyond the univariate case to the tensor smooth interaction case. Essentially, we then fit a smooth surface for the reference level and difference surfaces for the remaining levels of a factor. Such a model is set up below.

formula6 = Formula(lhs=lhs("y"), # The dependent variable - here y!

terms=[i(), # The intercept, a

f(["time","x"],te=True), # one smooth surface over time and x - f(time,x) - for the reference level = cond == b

f(["time","x"],te=True,binary=["cond","a"])], # another smooth surface over time and x - f(time,x) - representing the difference from the other surface when cond==a

data=dat)